Huawei's Xu Zhijun: US sanctions on Chinese AI chips won't be lifted anytime soon, so how should China respond?

09/20 2024

Author | Zhang Lianyi

"From a commercial application perspective, no technological advancement has had such a significant impact in such a short period as AI."

On September 19, at Huawei Connect 2024, Xu Zhijun, Huawei's Deputy Chairman and Rotating Chairman, delivered a keynote speech sharing his observations and reflections on intelligent transformation.

He believes that intelligent transformation will undoubtedly be a long-term process, and that computing power has been, and will remain, its crucial foundation. "However, we must face the reality that the US sanctions on China in the AI chip sector will not be lifted anytime soon."

"The chips we can manufacture will be limited in how advanced they can be, and this is a challenge we must confront when developing computing solutions." In his view, therefore, only computing power built on chip manufacturing processes that are actually available can be sustained in the long run.

Huawei's strategic core lies in fully embracing the opportunities presented by AI transformation: leveraging practically available chip manufacturing processes, innovating jointly across computing, storage, and networking to create new computing architectures, and developing 'super-node + cluster' system computing solutions that can meet computing power demand sustainably over the long term.

Meanwhile, Xu Zhijun also noted that although technological breakthroughs in large models have significantly accelerated intelligent transformation, not every enterprise needs to build large-scale AI computing power, not every enterprise needs to train its own foundation models, and not every application must pursue 'large' models.

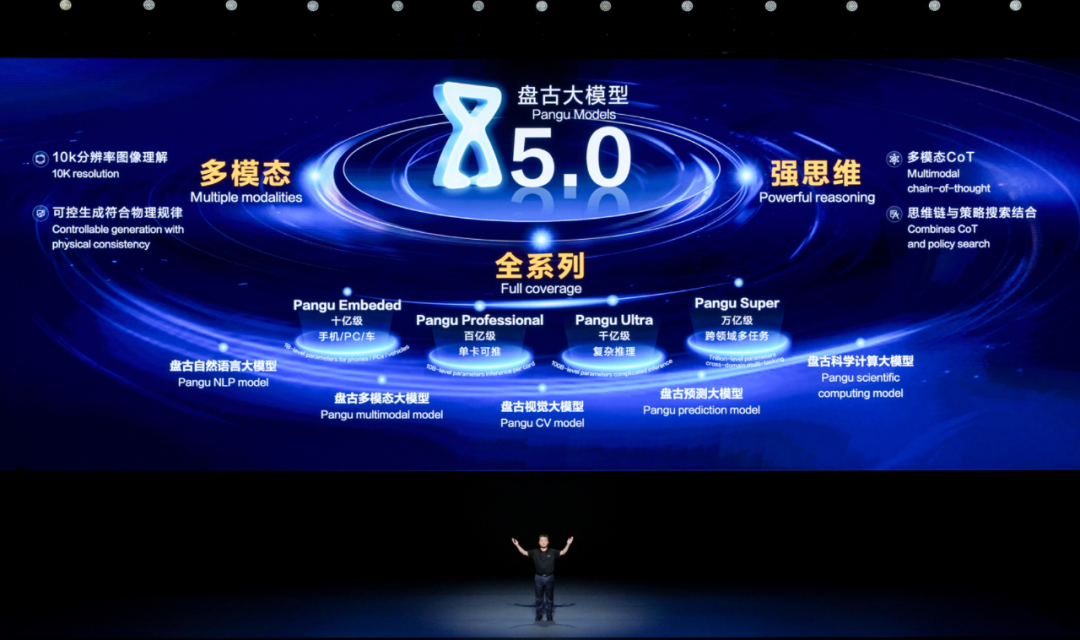

He pointed out, "From Huawei Pangu's industry practices, billion-parameter models can meet the needs of scientific computing, predictive decision-making, and other business scenarios; while ten-billion-parameter models can cater to various domain-specific scenarios, such as NLP, CV, and multimodal applications; for complex NLP and multimodal tasks, trillion-parameter models can be employed."

01 'Not every enterprise needs to build large-scale AI computing power'

'The sustainability of intelligence begins with the sustainability of computing power.' Xu Zhijun candidly acknowledged that computing power depends on semiconductor processes, yet US sanctions on China in the AI chip sector will not be lifted anytime soon, and China's semiconductor manufacturing processes, also subject to US sanctions, will lag behind for a considerable period. As a result, the chips China can manufacture will be limited in how advanced they can be, a challenge that must be confronted when developing computing solutions.

Huawei sees both challenges and opportunities. As AI becomes the dominant source of demand for computing power, it is driving structural changes in computing systems, shifting the emphasis from single-processor performance to system-level computing power. These structural changes create an opportunity to pioneer an independent, sustainable path for the computing industry through architectural innovation.

Advances in computing power and technological breakthroughs in large models have significantly accelerated intelligent transformation. For some time now, nearly every industry has been talking about large models, building AI computing power, and training large models.

In response to this phenomenon, he proposed three points for consideration:

1. Not every enterprise needs to build large-scale AI computing power.

On the one hand, AI servers, and AI computing clusters in particular, differ significantly from general-purpose x86 servers and place stringent demands on data center power supply and cooling. As large models keep growing, AI computing power must scale up with them, and this rapid change forces frequent AI server upgrades. Data centers are therefore caught in a dilemma: capacity built ahead of need risks sitting idle, while capacity built to current need soon becomes inadequate.

On the other hand, the AI hardware industry iterates rapidly, with a new product generation arriving every one to two years on average. Compared with public clouds, enterprises operate at a much smaller scale, and because large models evolve so quickly they struggle to train on a single hardware generation alone. Instead, they often mix several generations of products for model training, which complicates resource scheduling and can cause performance bottlenecks through a 'weakest link' effect, in which the slowest generation limits the whole job.
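To make the 'weakest link' effect concrete, here is a minimal Python sketch. It assumes synchronous data-parallel training in which every device receives the same batch and must finish before gradients are synchronized; the speech does not describe any specific setup, and the device speeds below are hypothetical placeholders, not measurements of real products.

```python
# Hypothetical illustration of the "weakest link" effect in synchronous
# data-parallel training on mixed hardware generations.
# Device speeds are made-up placeholders, not real product figures.


def step_time(batch_per_device: int, samples_per_sec: list[float]) -> float:
    """Seconds for one synchronous step when every device gets the same batch.

    All devices must finish before gradients are synchronized, so the step
    lasts as long as the slowest device needs.
    """
    return max(batch_per_device / s for s in samples_per_sec)


def cluster_throughput(batch_per_device: int, samples_per_sec: list[float]) -> float:
    """Samples processed per second by the whole cluster under even sharding."""
    total_samples = batch_per_device * len(samples_per_sec)
    return total_samples / step_time(batch_per_device, samples_per_sec)


if __name__ == "__main__":
    # Two newer accelerators (assumed 200 samples/s) mixed with two older
    # ones (assumed 100 samples/s).
    mixed = [200.0, 200.0, 100.0, 100.0]
    ideal = sum(mixed)  # what the cluster would do if no device ever waited
    actual = cluster_throughput(32, mixed)  # every device paced by the slowest
    newer_only = cluster_throughput(32, [200.0, 200.0])
    print(f"ideal {ideal:.0f}/s, mixed {actual:.0f}/s, newer only {newer_only:.0f}/s")
```

Under these assumed numbers the mixed cluster reaches 400 samples/s instead of the ideal 600, which is exactly what the two newer cards achieve on their own: adding the older cards buys nothing. Recovering that capacity means sizing each device's share to its speed, which is one source of the scheduling complexity described above.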

Furthermore, AI technology is still in its growth stage, with rapid technological change and multiple product generations in use at the same time, so operating and maintaining it demands strong technical skills. This is a significant challenge for the many enterprises whose IT maintenance capabilities are traditional. "Given that these challenges will persist for some time, I believe every enterprise should consider an appropriate way to acquire AI computing power rather than simply building its own."

2. Not every enterprise needs to train its own foundation models.

Training foundation models depends crucially on data, and preparing enough high-quality data is a significant challenge. Pre-training data for foundation models can reach the order of 10 trillion tokens, which is not only costly for enterprises but also hard to obtain in sufficient volume.

Model training is also difficult. As foundation models keep growing in parameter count, iterating on and optimizing them becomes challenging and can take months or even years. Enterprises should focus on their core business, since training their own foundation models can delay AI from empowering core business operations in a timely way.

Talent is another obstacle. The technologies behind foundation models are constantly evolving and experienced experts are scarce, so building a sufficient pool of technical talent is also a challenge for enterprises.

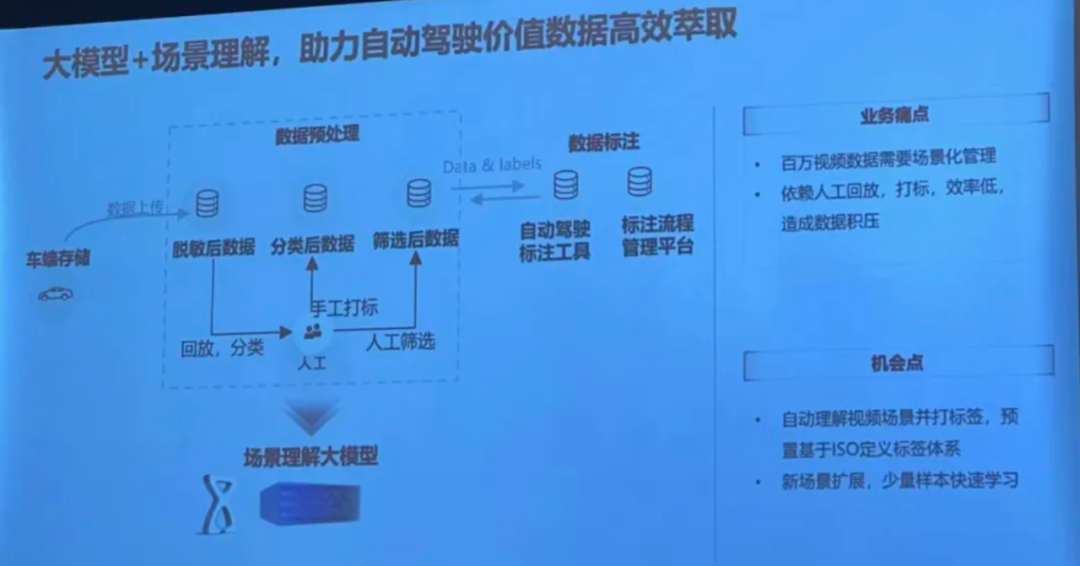

Huawei Cloud Pangu Large Model Enabling Scene Understanding

3. Not every application needs to pursue 'large' models.

Based on Huawei Pangu's industry practices, billion-parameter models can satisfy the needs of scientific computing, predictive decision-making, and other business scenarios, such as rainfall prediction, drug molecule optimization, and process parameter prediction. They are also widely used on end devices like PCs and mobile phones. Ten-billion-parameter models cater to various domain-specific scenarios, including NLP, CV, and multimodal applications, like knowledge Q&A, code generation, customer service assistants, and security inspection. For complex NLP and multimodal tasks, trillion-parameter models can be employed. Therefore, Xu Zhijun believes that enterprises should select the most suitable models for their different business scenarios, combining multiple models to solve problems and create value.

02 'Cloud services are a more rational and sustainable choice'

Based on these judgments, Xu Zhijun offers a different option for enterprises that lack the capability to build their own AI computing power and train foundation models in-house: cloud services.

On stage, he promoted Huawei Cloud: to address the challenges above, Huawei Cloud has upgraded its full AI stack so that every enterprise can train and apply models efficiently and on demand.

He further elaborated: Firstly, by continuously developing Ascend Cloud Services, Huawei Cloud allows enterprises to access robust AI computing power with just one click, eliminating the need for data center modifications, self-built facilities, or AI computing infrastructure operations and maintenance. Through end-to-end collaboration in computing, storage, and networking, Huawei Cloud has achieved uninterrupted training of trillion-parameter models in the cloud for 40 days.

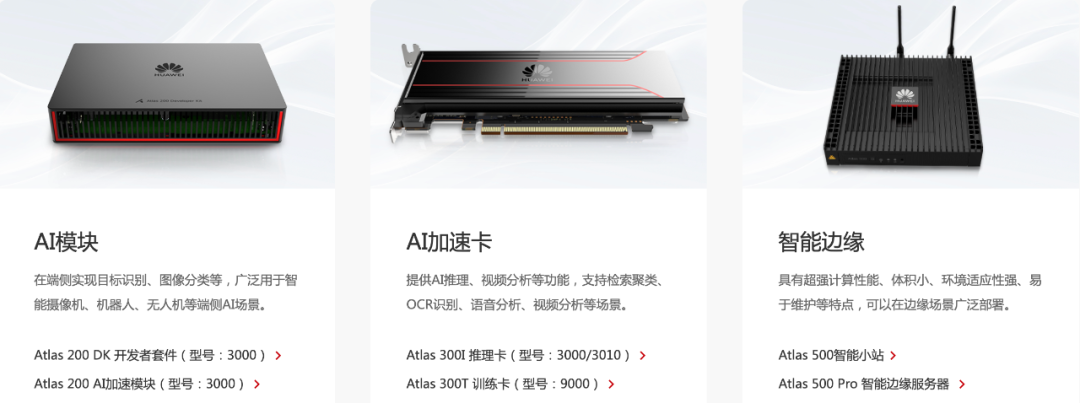

Some of Huawei's Ascend Computing Products

Secondly, Huawei Cloud has upgraded its ModelArts service to support mainstream foundation models out of the box, including Pangu, open-source, and third-party models, so enterprises no longer need to prepare vast amounts of data and iterate through training foundation models themselves. ModelArts also provides a one-stop toolchain for model fine-tuning, deployment, and evaluation, lowering the technical barriers for enterprises to fine-tune and incrementally train models.

Huawei Cloud is also fully committed to developing Pangu 5.0, which offers a full range of models at the billion, ten-billion, and trillion-parameter scales, tailored to the diverse scenarios of enterprises. Through the BaiMoQianTai community, it provides more than 100 large models, giving enterprises a richer selection.

In summary, Xu Zhijun believes that cloud services are the optimal choice for many enterprises pursuing intelligent transformation. Through Huawei Cloud's Ascend Cloud Services and Model Cloud Services, he said, Huawei aims to enable every enterprise to access AI computing power on demand and to train and apply models efficiently.

Pangu Large Model 5.0 Launched in June 2024

Training large models and running inference on them in the cloud does, however, introduce new security challenges, and Huawei Cloud has taken a series of measures to address them.

In terms of security philosophy, Huawei Cloud adopts a 'defense against extreme attacks' mindset, building seven layers of defense (physical, identity, network, application, host, data, and operations) and a Security Operations Center (SOC) based on zero trust. This setup withstands up to 1.2 billion attacks a day while ensuring business continuity, data integrity, and regulatory compliance.

In terms of security mechanisms, Huawei Cloud offers tiered cloud services to build secure digital spaces for customers, supporting physical or logical isolation and transparent, auditable cloud platform operations so that customers can use the cloud with peace of mind.

In terms of security technology, Huawei Cloud provides end-to-end, full-stack data security protection, covering the full data lifecycle as well as data flows, large model training, and inference. It also ensures end-to-end security and compliance for training data and generated content.

In terms of intellectual property, if content generated with Huawei Cloud's large model services infringes third-party intellectual property rights, Huawei will defend the customer at its own expense and compensate the customer for any losses, costs, or expenses arising from final court judgments or settlements with third parties. Specific details are subject to contractual agreements.

03 'Terminal AI should be experience-centric, not computing power-centric'

On the terminal side, Huawei has made a series of strategic moves to bring intelligence to devices.

Xu Zhijun noted that Huawei was among the first to bring AI to smartphones. Back in 2017, the Huawei Mate 10, equipped with an AI chip, brought AI capabilities such as AI smart imaging and AI translation to mobile phones for the first time, ushering in the Mobile AI era.

Today, as AI enters the large model era, Huawei has deeply integrated AI with the HarmonyOS operating system on a device-chip-cloud collaborative architecture, rebuilding HarmonyOS's native intelligence around AI. This brings intelligence to every layer from the kernel to system applications, while enabling more open ecosystem collaboration and more reliable privacy and security protection.

In the future, Huawei will upgrade 'Celia' to an intelligent agent based on HarmonyOS's native intelligence, enabling more natural multimodal interactions and comprehensive perception, accurately understanding users, the digital world, and the physical world to provide users with intelligent and personalized services across scenarios.

Concurrently, focusing on consumers' needs across work, study, life, and entertainment scenarios, Huawei will collaborate with HarmonyOS ecosystem partners to build intelligent capabilities for future products. It will also fully open AI model capabilities and AI widgets, empowering third-party applications and fostering a thriving HarmonyOS native application ecosystem.

"We have also observed that incorporating AI capabilities into various terminals has become a prevalent trend, such as creating AI Phones and AI PCs. Consequently, there are diverse voices in the industry regarding how to define intelligent terminals in the AI era," said Xu Zhijun. "We consistently believe that consumer experience takes precedence. Consumers find it difficult to comprehend chip processes, computing power measured in TFLOPS, or model parameter counts. Instead, they prioritize tangible user experiences. Therefore, we advocate that terminal AI should be experience-centric rather than computing power-centric."

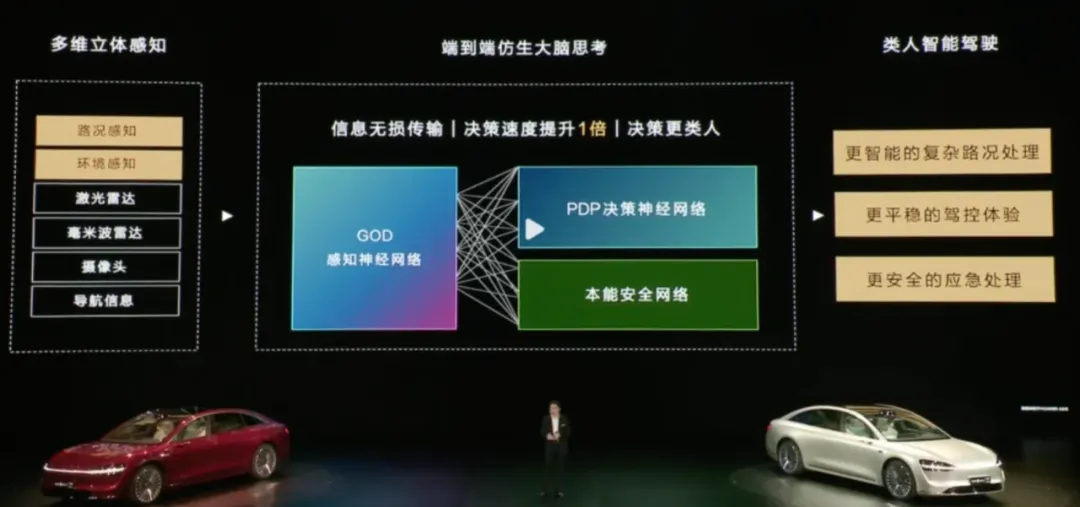

Autonomous driving is also one of the first areas where Huawei invested in AI, because autonomous driving, with driverless operation as its goal, is one of the most challenging AI application scenarios. To this end, Huawei has launched ADS 3.0, which enables 'one-click' navigation from one parking space to another, seamlessly covering public roads, campuses, and underground car parks, and further upgrades the omnidirectional collision avoidance system to cover more speed ranges and avoid obstacles in all directions.

HUAWEI ADS 3.0 Solution

In Xu Zhijun's view, Chinese consumers are already quite familiar with intelligent driving, and a high proportion opt for high-end intelligent driving versions when buying new cars; intelligent driving capability has become a crucial factor in Chinese consumers' purchasing decisions. Building on fusion perception, Huawei will keep evolving its autonomous driving solutions, gradually enabling the following:

- On highways, drivers can rest as soon as they get in the vehicle, for a peaceful long-distance journey.
- On urban and suburban roads, driving will be effortless, safe, and stable, comparable to an experienced driver.
- In rural and mountainous areas, drivers can handle all terrains and weather conditions with confidence.
- In parking scenarios, drivers can walk away as soon as they arrive, without worrying about scrapes or the car getting stuck.
- In terms of safety, Huawei aims for omnidirectional, proactive safety, eliminating collisions in which the vehicle bears primary responsibility and reducing those in which it bears secondary responsibility.

Once these key scenario goals are achieved, he hopes to realize driverless capabilities by around 2030.