Revolutionizing Edge AI: DeepSeek Paves the Way for Real-World Implementation

02/21 2025

In our previous article, "From Additional Features to Reconstructing Product Value, Edge AI Will Redefine Wearable Devices," we discussed how intelligent terminals are leveraging local AI to enhance product value, transitioning AI from a mere "additional feature" to a "core capability" through hardware and software collaboration. This shift signifies that edge AI will soon become the defining factor for intelligent terminal devices.

The move from cloud-based generative AI to edge deployment has required upstream and downstream vendors to spend a long technical cycle exploring hardware innovation, edge-side model optimization, and coordinated scenario deployment. As AI moves from the cloud to the edge, how can terminal devices truly become "intelligent"? The emergence of DeepSeek offers a compelling answer. Its low cost, high performance, and open-source availability highlight the vast potential of edge AI and ease the constraints that hardware computing power and energy efficiency have long imposed on it. As a result, deploying small models distilled from large models on edge devices has become far more feasible.

The compact distilled models released alongside DeepSeek-R1 cut parameter counts substantially while preserving much of the performance. This lowers the complexity of edge deployment and eases earlier obstacles such as limited storage, high compute consumption, and inference latency. Renowned analyst Ming-Chi Kuo recently predicted that DeepSeek's popularity will accelerate the shift toward edge AI.

With DeepSeek's support, the possibilities for edge applications continue to expand. Especially at this early stage of edge AI, AI module vendors are actively deploying DeepSeek to help downstream terminal customers build local intelligence. Modules with DeepSeek integrated let small and medium-sized vendors further down the industry chain add AI capabilities quickly and launch their own terminal products. AI modules are thus clearing the final hurdle to DeepSeek's real-world deployment and are poised to spread rapidly to terminals this year.

DeepSeek: A New Engine for Edge AI

Since its launch, DeepSeek has drawn significant attention from the tech community, with chip vendors, module vendors, software vendors, solution providers, and vertical application terminal vendors all rushing to join the DeepSeek ecosystem. What sets this globally popular model apart? In particular, how does DeepSeek differ from previous models for edge applications?

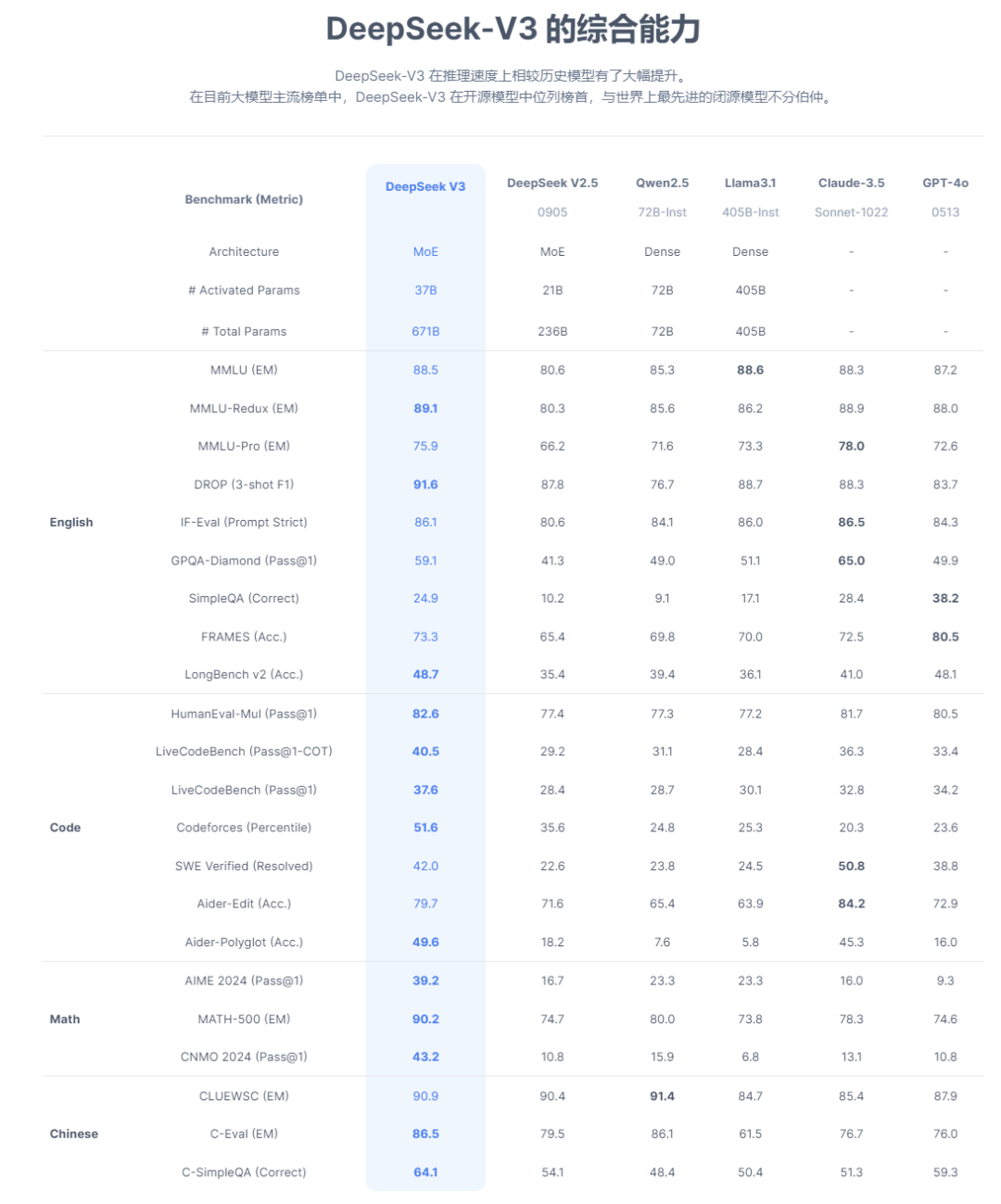

Source: DeepSeek

Firstly, DeepSeek's lightweight model design stands out. It uses its own DeepSeekMoE architecture, which reduces knowledge redundancy among experts and replaces the feed-forward network (FFN) of a standard Transformer with a sparse Mixture-of-Experts (MoE) layer. Each token activates only a small number of experts, which sharply reduces per-token computation and memory traffic. Of the 671B parameters in the full model, only about 37B are activated for any given token, lowering the resources needed for inference. This design helps bring AI to terminal devices with limited computing resources and supports a smooth path from ultra-large models down to edge devices. It is well suited to local deployment on mobile phones, PCs, AR/VR wearables, and automobiles.
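To make the sparse-activation idea concrete, here is a minimal pure-Python sketch of a generic top-k Mixture-of-Experts layer. The router, the toy experts, and names such as `moe_layer` and `make_expert` are hypothetical illustrations; DeepSeekMoE additionally uses always-on shared experts and its own gating function, which are not modeled here. The point is only that the router scores every expert, but only `k` of them are actually evaluated.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(token: Vector, router: List[Vector], experts: List[Expert], k: int) -> Tuple[Vector, List[int]]:
    """Route one token to its top-k experts and mix their outputs.

    Generic top-k softmax gating (an illustrative assumption, not DeepSeek's exact router).
    """
    # One affinity score per expert: dot product of the token with that expert's router vector.
    scores = [sum(t * w for t, w in zip(token, row)) for row in router]
    # Keep only the k highest-scoring experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        y = experts[i](token)
        out = [o + gate * v for o, v in zip(out, y)]
    return out, chosen


# Toy usage: four hypothetical experts that each scale the input and log when they run.
calls: List[int] = []


def make_expert(i: int) -> Expert:
    def expert(x: Vector) -> Vector:
        calls.append(i)
        return [(i + 1) * v for v in x]
    return expert


experts = [make_expert(i) for i in range(4)]
router = [[0.1, 0.0], [0.9, 0.0], [0.5, 0.0], [0.3, 0.0]]
output, chosen = moe_layer([1.0, 0.0], router, experts, k=2)
print(chosen, calls)  # [1, 2] [1, 2]: only 2 of the 4 experts did any work
```

In a full model the same selection happens for every token in every MoE layer, which is how a model can hold 671B parameters while activating only about 37B per token.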

Secondly, the key-value (KV) cache used during large-model inference is a major memory bottleneck. DeepSeek's Multi-head Latent Attention (MLA) addresses this by jointly compressing keys and values into a low-rank latent representation, reducing the KV cache by about 90% compared with standard Multi-Head Attention (MHA) and improving inference efficiency. While maintaining performance, MLA reduces dependence on memory bandwidth, making the model lighter and better suited to edge deployment.
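The back-of-envelope calculation below shows why caching a compressed latent instead of full keys and values shrinks the KV cache. All dimensions (layer count, head count, head width, latent width, the small positional key, and sequence length) are illustrative assumptions rather than the configuration of any specific DeepSeek model, so the exact percentage will differ from model to model.

```python
def kv_cache_bytes(layers: int, seq_len: int, elems_per_token_layer: int, bytes_per_elem: int = 2) -> int:
    """Total cache size: every layer stores a fixed number of elements per cached token (FP16 = 2 bytes)."""
    return layers * seq_len * elems_per_token_layer * bytes_per_elem


# Illustrative (hypothetical) model dimensions.
LAYERS, HEADS, HEAD_DIM = 32, 32, 128
LATENT_DIM, ROPE_DIM = 512, 64
SEQ_LEN = 4096

# MHA caches a full key and a full value vector for every head.
mha_elems = 2 * HEADS * HEAD_DIM   # 8192 elements per token per layer
# MLA caches one jointly compressed KV latent plus a small positional (RoPE) key.
mla_elems = LATENT_DIM + ROPE_DIM  # 576 elements per token per layer

mha = kv_cache_bytes(LAYERS, SEQ_LEN, mha_elems)
mla = kv_cache_bytes(LAYERS, SEQ_LEN, mla_elems)
reduction = 1 - mla / mha

print(f"MHA: {mha / 2**30:.2f} GiB, MLA: {mla / 2**20:.0f} MiB, reduction: {reduction:.1%}")
# MHA: 2.00 GiB, MLA: 144 MiB, reduction: 93.0%
```

The saving comes entirely from the per-token, per-layer element count, so it scales linearly with context length: the longer the conversation, the more memory MLA saves on a device with limited RAM.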

For distillation and local deployment, DeepSeek offers a range of distilled models, such as the 1.5B-parameter version of R1 (DeepSeek-R1-Distill-Qwen-1.5B), that can run on resource-constrained hardware. For instance, a PC can handle basic inference tasks with only about 1.1GB of memory, greatly broadening the range of AI application scenarios.
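A rough estimate shows why a 1.5B-parameter model fits in this kind of footprint once it is quantized. The sketch counts weight storage only and treats 1.5B as an exact parameter count; real memory use also includes the KV cache, activations, runtime overhead, and format-specific metadata, and the article does not say which quantization format underlies its 1.1GB figure.

```python
def weight_bytes(num_params: int, bits_per_weight: int) -> int:
    """Bytes needed just to store the weights (no KV cache, activations, or runtime overhead)."""
    return num_params * bits_per_weight // 8


PARAMS = 1_500_000_000  # nominal size of the 1.5B distilled model

for label, bits in [("FP16", 16), ("INT8", 8), ("INT4", 4)]:
    print(f"{label}: {weight_bytes(PARAMS, bits) / 1e9:.2f} GB")
# FP16: 3.00 GB
# INT8: 1.50 GB
# INT4: 0.75 GB
```

On these assumptions, even 8-bit weights alone would exceed 1.1GB, so a footprint of that size implies the weights are stored at well under 8 bits each.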

DeepSeek also puts considerable effort into efficiency, reducing the energy spent on parallel computation and communication. It uses the DualPipe pipeline-parallel algorithm, which overlaps the computation and communication phases of forward and backward propagation to minimize GPU idle time. Combined with 16-way pipeline parallelism, 64-way expert parallelism, and ZeRO-1 data parallelism, this substantially lowers energy consumption. DeepSeek also supports FP8 mixed-precision training, applying group-wise quantization to activations and block-wise quantization to weights so that matrix operations run efficiently on Tensor Cores with less computational energy. At inference time, resource allocation is optimized by separating the prefilling and decoding stages. Most of these measures target training and large-scale serving; for edge AI, their main benefit is cheaper, more efficient models rather than a direct cut in a device's power draw.
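The fine-grained scaling idea behind group-wise quantization can be illustrated in a few lines. This sketch is a simplified integer stand-in for FP8: each group of values gets its own scale, so one large outlier only degrades precision within its own group. The function names, the symmetric 8-bit range (`qmax=127`), and the tiny group size are illustrative assumptions; DeepSeek's actual scheme uses FP8 formats on GPUs.

```python
from typing import List, Tuple


def quantize_groupwise(x: List[float], group_size: int, qmax: int = 127) -> Tuple[List[int], List[float]]:
    """Symmetric per-group quantization: one scale per contiguous group of values."""
    q: List[int] = []
    scales: List[float] = []
    for start in range(0, len(x), group_size):
        group = x[start:start + group_size]
        amax = max(abs(v) for v in group)
        scale = amax / qmax if amax > 0 else 1.0  # map the group's largest magnitude to qmax
        scales.append(scale)
        q.extend(max(-qmax, min(qmax, round(v / scale))) for v in group)
    return q, scales


def dequantize_groupwise(q: List[int], scales: List[float], group_size: int) -> List[float]:
    """Multiply each integer back by the scale of the group it belongs to."""
    return [v * scales[i // group_size] for i, v in enumerate(q)]


# Small activations followed by a group containing a large outlier (5.0).
x = [0.01, -0.02, 0.03, 0.04, 5.0, -3.0, 1.0, 2.5]

q_group, s_group = quantize_groupwise(x, group_size=4)
q_tensor, s_tensor = quantize_groupwise(x, group_size=len(x))  # one scale for everything

print(dequantize_groupwise(q_group, s_group, 4)[:4])         # small values survive
print(dequantize_groupwise(q_tensor, s_tensor, len(x))[:4])  # 0.01 is rounded to zero
```

With a single per-tensor scale, the outlier forces a coarse step size and the smallest activation is lost; per-group scales keep it, which is the motivation for finer-grained scaling at low precision.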

Lastly, DeepSeek offers significant privacy advantages over cloud-based solutions. It supports fully offline local deployment, so user data never needs to be uploaded to the cloud, avoiding the risk of leaking sensitive information. For encryption and access control, DeepSeek combines a dynamic routing strategy and redundant expert deployment with access control mechanisms to prevent reverse analysis of the model's internal data. In addition, its API services support key management and usage monitoring to further protect data.

Overall, through the MoE architecture and the MLA attention mechanism, DeepSeek achieves a thoroughly lightweight design without sacrificing performance. Rather than depending mainly on deployment tooling, such as TensorFlow Lite's post-training quantization to shrink model size or Core ML's Metal acceleration and hardware adaptation, DeepSeek builds efficiency into the model itself, which makes it more flexible and efficient. Together with its gains in low power consumption and privacy protection, these innovations are crucial for edge AI: they help bring models to edge devices and offer a stronger option for diverse AI deployment needs. DeepSeek is emerging as a new engine for edge AI.

DeepSeek Drives Edge AI Industry Chain Development

Although DeepSeek has been available for only a short time, it has quickly become the focus of upstream and downstream vendors in edge AI. Among device makers, mobile phone vendors such as OPPO, Honor, and Meizu have announced integration with the DeepSeek model. The automotive industry is moving quickly as well, with eight automakers, including Geely, Zeekr, Lantu, Baojun, IM Motors, Dongfeng, Leapmotor, and Great Wall, integrating DeepSeek. In the PC field, domestic GPU vendor Moosys has partnered with Lenovo to launch a DeepSeek-powered AIO, and Intel AIPC partner Flowy was the first to support running DeepSeek locally, in the latest version of its AIPC assistant. Terminal hardware is integrating DeepSeek at an astonishing pace.

In the upstream chip field, a recent research report from Citibank analyst Laura Chen's team stated that DeepSeek is driving low-cost, edge-side deployment of AI and reshaping the semiconductor industry landscape. Domestic companies in the AI chip industry chain, whose products are closely tied to models, have responded swiftly. More than a dozen local AI chip vendors, including Moosys, Suiyuan Technology, Huawei Ascend, Haiguang Information, Longxin Zhongke, Tianshi Zhixin, Biren Technology, Moore Threads, Vimicro, and Cloudwalk Technology, have announced that they have adapted DeepSeek models for cloud or edge deployment.

For instance, Huawei Ascend has worked with DeepSeek to support inference deployment of the DeepSeek-R1 and DeepSeek-V3 models. Cloudwalk Technology has finished adapting its DeepEdge10 "computing building block" chip platform to the DeepSeek-R1 series of large models, with a focus on edge applications. Haiguang Information announced that it has adapted DeepSeek-V3, DeepSeek-R1, and the DeepSeek Janus-Pro multimodal model to its DCU (Deep Computing Unit). Vimicro Technology's Starlight intelligent series of AI chips is also integrating DeepSeek model capabilities with a focus on edge applications.

In smart hardware, where chips account for the largest share of cost, demand for edge SoCs and ASICs for on-device AI will grow as DeepSeek-based edge applications spread, creating more market opportunities. Representative examples include SoCs from Hynix Technology, Rockchip, Amlogic, Allwinner Technology, Fullhan Microelectronics, Espressif Systems, Bluetrum Technology, and Actions Technology, as well as edge ASICs from ASR Microelectronics and Cambrian AI.

As DeepSeek reaches the application side, intelligent terminals' demand for memory chips is also strong. Take the AI phone, a typical terminal that combines edge AI with advanced storage: a high-end model requires 8-12GB of DRAM and 128-512GB of NAND Flash. In the wearable market, demand for medium- and high-capacity NOR Flash is also clearly rising. Memory chip vendors such as GigaDevice, Longsys, ProPlus Electronics, and Maxscend stand to benefit in the era of edge AI.

Module vendors are also moving quickly to combine edge AI with DeepSeek. For instance, Megabyte Intelligence is accelerating the development of DeepSeek-R1 applications at the edge and plans to launch an AI module with 100 TOPS of computing power in 2025. Quectel, Sierra Wireless, Runsyn Tech, and SIMCom are also building out related module products.

Quectel has announced that its SG885G edge computing module, built on the Qualcomm QCS8550 platform, runs the DeepSeek-R1 distilled small model stably, bringing the DeepSeek model to the edge. Quectel has also announced that its high-performance AI modules and solutions fully support the small DeepSeek-R1 models, helping customers quickly strengthen on-device AI inference. Megabyte Intelligence, drawing on the heterogeneous computing power of its AIMO intelligent agent and high-performance AI modules, together with its know-how in model quantization, deployment, and power optimization, is accelerating both edge-side DeepSeek-R1 applications and end-to-end solutions that combine edge and cloud.

Edge AI has become a core driver of intelligent device innovation. The DeepSeek wave has propelled this emerging market forward, and comprehensive terminal AI built on edge AI plus DeepSeek is gathering speed. The entire industry chain stands to benefit. DeepSeek marks the start of the first year of edge AI, with AI modules clearing the final hurdle to DeepSeek's real-world deployment at the edge.

Returning to the initial question, how can terminal devices truly become "intelligent" as AI shifts from the cloud to the edge? Judging by current edge applications, DeepSeek is addressing the three challenges faced in the final mile of edge AI implementation: hardware fragmentation, model generalization, and edge-side energy efficiency.

Hardware fragmentation refers to the large differences in computing power and architecture among edge devices such as mobile phones, cameras, and sensors, which make it hard for traditional AI models to adapt and optimize efficiently. DeepSeek's impact here is starting to show. First, DeepSeek's distilled and quantized edge models are lightweight and largely hardware-agnostic, supporting a smooth path from ultra-large models down to edge devices and meeting the multi-tier hardware requirements of various edge scenarios.

Second, by optimizing the model architecture, DeepSeek's dynamic heterogeneous computing framework supports coordinated scheduling across the multiple compute units within edge chips, easing the problem of fragmented hardware configurations. Upstream chip manufacturers across the board have begun pursuing software-hardware co-innovation based on DeepSeek, and future edge chips are expected to narrow the large differences in computing power and architecture among edge devices.
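As a rough illustration of what coordinated scheduling across compute units involves, here is a minimal greedy list scheduler that assigns each operation to whichever unit would finish it earliest. The unit names, operation names, and cost estimates are all hypothetical, the sketch ignores data dependencies and transfer costs, and it is not DeepSeek's framework; it only shows the basic trade-off a heterogeneous runtime has to make.

```python
from typing import Dict, List, Tuple

Task = Tuple[str, Dict[str, float]]  # (operation name, estimated cost on each unit that supports it)


def schedule(tasks: List[Task], units: List[str]) -> Tuple[Dict[str, str], float]:
    """Greedy earliest-finish-time assignment; returns the placement and the overall makespan."""
    busy_until = {u: 0.0 for u in units}
    placement: Dict[str, str] = {}
    for name, costs in tasks:
        # Only consider units that can run this operation at all.
        candidates = [u for u in units if u in costs]
        best = min(candidates, key=lambda u: busy_until[u] + costs[u])
        busy_until[best] += costs[best]
        placement[name] = best
    return placement, max(busy_until.values())


# Hypothetical cost estimates (milliseconds) for a few operations on an edge SoC.
tasks: List[Task] = [
    ("matmul_0", {"npu": 2.0, "gpu": 3.0, "cpu": 10.0}),
    ("matmul_1", {"npu": 2.0, "gpu": 3.0, "cpu": 10.0}),
    ("softmax", {"gpu": 1.0, "cpu": 1.5}),
    ("tokenize", {"cpu": 0.5}),
]
placement, makespan = schedule(tasks, ["npu", "gpu", "cpu"])
print(placement, makespan)
# {'matmul_0': 'npu', 'matmul_1': 'gpu', 'softmax': 'cpu', 'tokenize': 'cpu'} 3.0
```

The second matrix multiply lands on the GPU even though the NPU is faster per operation, because the NPU is already busy; a production runtime would also account for dependencies between operations and the cost of moving tensors between units.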

Model generalization refers to the tendency of traditional models to degrade under the constantly changing conditions of edge environments. How can a model cope with the complexity and variability of edge scenarios while remaining lightweight? DeepSeek offers a strong answer. Its distillation approach breaks the large model's logic down into thinking and reasoning patterns, rather than simple memorized knowledge, and transfers them into edge models; the released R1 distilled models were produced by fine-tuning smaller open-source models on reasoning samples generated by DeepSeek-R1. Although these edge models are small, they outperform earlier edge models and adapt more fully to vertical edge scenarios.
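DeepSeek's distilled R1 models were trained on samples generated by the large model (a sequence-level form of distillation). The sketch below instead shows the classic logit-matching form of knowledge distillation, in which a student is penalized for diverging from the teacher's temperature-softened output distribution. It is included only to illustrate the general teacher-to-student idea; the function names, temperature, and logits are illustrative assumptions, and it is not DeepSeek's pipeline.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled, numerically stable softmax."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float], temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2 (classic KD)."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [2.0, 1.0, 0.1]
close_student = [1.9, 1.1, 0.1]
far_student = [0.1, 1.0, 2.0]
print(distillation_loss(close_student, teacher) < distillation_loss(far_student, teacher))  # True
```

The temperature spreads probability mass over non-top answers, so the student also learns how the teacher ranks the alternatives, not just which answer it picks.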

Edge energy efficiency has long been a challenge that model developers and edge hardware vendors must tackle together through sustained software-hardware co-optimization. DeepSeek has achieved extreme compression at the algorithmic level, while deeper integration with customized hardware will depend on future adaptation and iteration.

DeepSeek's arrival has accelerated edge AI development, and combining AI modules with DeepSeek offers a solution for the last leg of edge AI deployment in real-world industries. For downstream terminal vendors in the edge AI industry chain, particularly small and medium-sized enterprises, the challenge is how to equip terminal products with local intelligence conveniently, quickly, and efficiently.

As the capital market buzz around DeepSeek subsides, the next phase depends on real-world industry adoption to drive terminal device upgrades and market expansion. Module vendors, as midstream players closely aligned with terminal devices, can integrate DeepSeek into their AI modules to offer downstream players more precise and efficient edge AI products and services, solving the problem of deploying edge AI in practical settings.

DeepSeek transfers the reasoning capabilities of large models to smaller, more efficient edge versions that integrate smoothly into intelligent modules. For instance, Quectel's SG885G AI module has tuned the DeepSeek-R1 distilled small model for on-device operation, delivering more precise and efficient edge AI services at a generation speed of over 40 tokens/s, with room for further optimization. ThisChip Technology's DeepSeek-R1-1.5B model, adapted for its edge platform, reaches an inference speed of nearly 40 tokens/s, and its 7B model reaches 10 tokens/s. These results show that edge modules running DeepSeek can reach practical inference speeds. Edge AI products equipped with DeepSeek AI modules can then take on more computation locally, easing the load on cloud servers.
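Tokens-per-second figures are easier to interpret when converted into per-token latency and end-to-end response time. The sketch below does that for the two speeds quoted above and adds a common rule of thumb for single-stream decoding: because each generated token reads all of a dense model's weights, memory bandwidth divided by weight size gives an approximate upper bound on speed. The 256-token answer length, the 50 GB/s bandwidth, and the 4-bit weight sizes are hypothetical assumptions, not measurements of any module mentioned here.

```python
def ms_per_token(tokens_per_second: float) -> float:
    """Average time to generate one token, in milliseconds."""
    return 1000.0 / tokens_per_second


def response_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to stream a full answer of num_tokens at a steady decode speed."""
    return num_tokens / tokens_per_second


def bandwidth_bound_tps(bandwidth_bytes_per_s: float, weight_bytes: float) -> float:
    """Approximate ceiling for batch-1 decoding of a dense model: every token streams all weights once."""
    return bandwidth_bytes_per_s / weight_bytes


ANSWER_TOKENS = 256  # hypothetical response length
BANDWIDTH = 50e9     # hypothetical 50 GB/s memory bandwidth

for label, tps, params in [("1.5B", 40.0, 1.5e9), ("7B", 10.0, 7e9)]:
    weights = params * 4 / 8  # assumed 4-bit weights
    print(
        f"{label}: {ms_per_token(tps):.0f} ms/token, "
        f"{response_seconds(ANSWER_TOKENS, tps):.1f} s per answer, "
        f"bandwidth ceiling ~{bandwidth_bound_tps(BANDWIDTH, weights):.0f} tokens/s"
    )
# 1.5B: 25 ms/token, 6.4 s per answer, bandwidth ceiling ~67 tokens/s
# 7B: 100 ms/token, 25.6 s per answer, bandwidth ceiling ~14 tokens/s
```

Under these hypothetical numbers, the larger model sits much closer to its bandwidth ceiling, which is one reason small distilled models and low-bit quantization matter so much on edge hardware.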

The DeepSeek-enabled modules announced so far cover a wide range of application scenarios, including smart cars, machine vision, PCs, robots, smart homes, AI toys, and wearable devices. This multi-scenario support lets equipment manufacturers across industries and terminal types take full advantage of DeepSeek's local intelligence, accelerating the development of intelligent terminals.

Furthermore, module manufacturers are actively promoting DeepSeek module products with a range of computing power and power consumption levels to meet downstream customers' differing needs for cost and size. Continued module optimization for different terminal applications will markedly shorten the deployment cycle for edge intelligence products, so that terminals genuinely benefit from AI.

DeepSeek provides robust support for addressing fragmented edge AI hardware, model generalization, and performance bottlenecks. The deep integration of modules with DeepSeek clears the way past the final hurdle in edge AI deployment. Ultimately, this points toward edge AI defining the core functions of terminal devices and making terminal hardware truly intelligent.

In Conclusion

For a long time, the limits of edge-side models have constrained the development of smart terminal hardware. DeepSeek's emergence is beginning to change this. In the foreseeable future, DeepSeek AI modules tailored for edge application development will appear, giving terminals convenient and efficient AI capabilities. Edge AI stands on the cusp of an explosion.