"DeepSeek" in Vehicles: More Than Just a Marketing Gimmick?

02/20 2025

Introduction

Non-smart cars are almost an embarrassment on the road today.

DeepSeek's surge in popularity is now reaching the automotive industry across the board. As of February 12, more than 20 automakers and brands had announced DeepSeek integration, including mainstream players such as BYD, Geely, Chery, Changan, SAIC, Dongfeng, and GAC.

Currently, DeepSeek's computational cost is a fraction of that of OpenAI's models. Integrated into automotive products, it significantly lowers the barrier for automakers to upgrade their vehicles' intelligent features. By combining cloud-hosted models with local deployment, automakers can roll out new functions quickly through OTA updates.

Various automakers have indicated that DeepSeek will enhance intelligent voice capabilities, optimize the understanding of vague intentions, and boost proactive service capabilities, thereby elevating interaction experiences in areas such as navigation planning and entertainment recommendations. Furthermore, the introduction of end-to-end models, with their robust knowledge and reasoning capabilities, can substantially improve the efficiency and quality of automated data generation, accelerating the iteration of high-level intelligent driving functions.

However, as DeepSeek "enters vehicles," some industry insiders, particularly those sensitive to marketing claims, have raised doubts: Is a large model that has undergone no adaptive development for in-vehicle systems merely being used as a marketing gimmick? Can the limited computing power of in-vehicle systems handle it?

In fact, such doubts have some merit but are also one-sided.

The merit lies in the fact that in-vehicle systems indeed have relatively limited computing power. Compared to large servers or high-performance computing devices, in-vehicle systems may struggle with complex computational tasks. If the DeepSeek large model has not been specifically optimized and adaptively developed for the in-vehicle environment, direct deployment may lead to inefficient operation and sluggish response.

The one-sidedness stems from the fact that many automakers do not simply deploy DeepSeek onto in-vehicle systems unchanged. Instead, they conduct targeted optimization, adaptive development, or lightweight/customized development.

For example, techniques such as model pruning, quantization, and distillation are used to reduce the model's computational demands, while input and output logic is optimized for in-vehicle scenarios. With distillation, the knowledge of the DeepSeek large model is compressed into a smaller "student" model that can run efficiently on in-vehicle chips.
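To make two of these techniques concrete, here is a minimal sketch in plain Python. It is illustrative only and does not reflect any automaker's or DeepSeek's actual pipeline: `quantize_int8` shows symmetric per-tensor int8 quantization of a weight list, and `distillation_loss` shows the KL-divergence term commonly used to train a "student" model against a "teacher." All function names and the temperature value are assumptions made for this example.

```python
import math

def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid division by zero
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the integer values."""
    return [v * scale for v in q]

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax, shifted by the max for numerical stability."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) over temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
```

Quantization trades a small reconstruction error (at most about half of `scale` per weight) for storage that is a quarter the size of 32-bit floats, while the distillation loss falls to zero as the student's output distribution matches the teacher's.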

Can in-vehicle computing power support the operation of large models?

It is undeniable that in-vehicle computing power is limited, especially compared to cloud servers. Directly deploying large, highly complex DeepSeek models onto in-vehicle systems may lead to inefficient operation or even failure to operate normally.

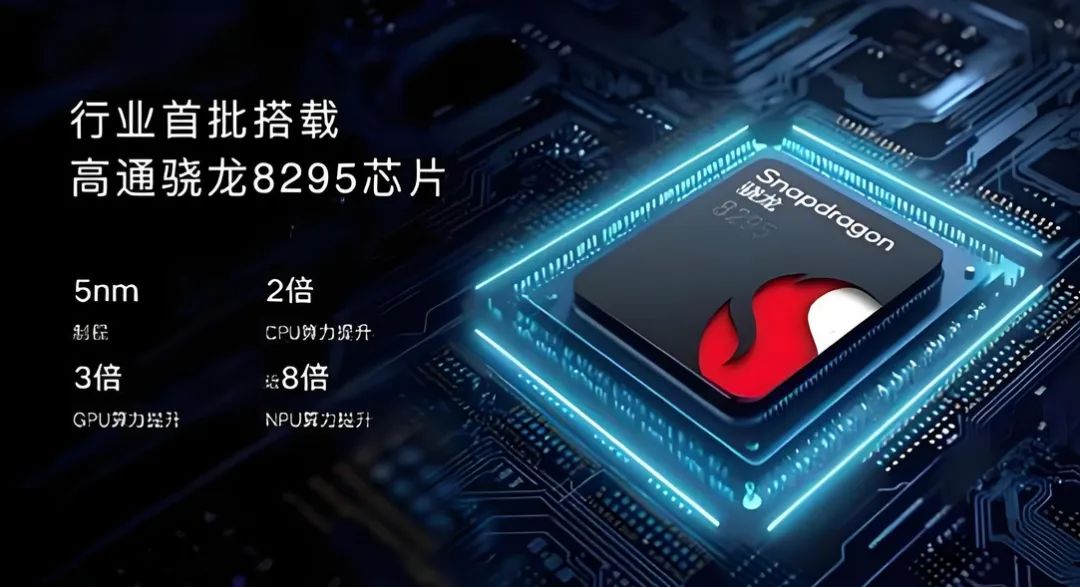

However, automakers typically adopt a "cloud-end collaboration" approach, splitting work between the cloud and the vehicle, to address the computing power problem. Complex computational tasks are handled in the cloud, while the in-vehicle system manages lightweight local tasks (such as voice activation and simple command execution). In addition, the computing power of in-vehicle chips keeps improving: some high-end vehicles are already equipped with high-performance computing units (such as the Qualcomm Snapdragon 8295 and NVIDIA Orin) that can support large models to a certain extent.

Many automakers reportedly have achieved significant results after integrating DeepSeek. For instance, after Geely Auto deeply integrated its self-developed Xingrui large model with DeepSeek-R1, interaction response speed reportedly increased by 40% and intention recognition accuracy reached 98%. Other automakers such as Dongfeng Motor and VOYAH Auto have also reported substantial improvements in the naturalness and scene understanding of in-car voice interaction following the integration of DeepSeek.
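The division of labor described here can be sketched as a simple request router. This is a hypothetical illustration, not any automaker's implementation: the command set, the function name `route_request`, and the `"local_fallback"` label are all assumptions made for the example.

```python
# Hypothetical set of simple commands the in-vehicle system handles on its own.
LOCAL_COMMANDS = {
    "open window", "close window",
    "turn on ac", "turn off ac",
    "volume up", "volume down",
}

def route_request(utterance: str, cloud_available: bool = True) -> str:
    """Decide where a voice request should be processed."""
    # Normalize case and whitespace before matching.
    text = " ".join(utterance.lower().split())
    if text in LOCAL_COMMANDS:
        return "local"           # lightweight task: run on the in-vehicle chip
    if cloud_available:
        return "cloud"           # complex or open-ended request: send to the large model
    return "local_fallback"      # no connectivity: degrade to on-device handling
```

A real system would use an on-device intent classifier rather than exact string matching, but the routing decision has the same shape: simple commands stay local for low latency, and open-ended requests go to the cloud when a connection is available.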

Will some automakers "boast"?

If automakers merely state in their promotions that they have integrated the DeepSeek large model, but have not actually optimized it sufficiently for in-vehicle scenarios, or have only made it work under specific conditions (such as a laboratory environment) while it performs poorly in real driving and fails to deliver a stable, smooth user experience, then the doubts are justified.

In such cases, automakers' promotions may be overly exaggerated, failing to truthfully reflect the actual level of technology and thus misleading consumers.

However, if automakers promoting DeepSeek integration have indeed invested heavily in the technical work, such as model optimization, computing power adaptation, and scenario customization, so that the model runs efficiently on in-vehicle systems and delivers smooth, accurate voice interaction in actual use, then the integration holds practical significance.

In such scenarios, automakers' promotions are grounded in actual technical strength and are not exaggerated. Consumers can also understand the true performance of the products based on these promotions.

Whatever automakers claim about integrating DeepSeek, the ultimate criterion is user experience. If users perceive an improvement in the intelligence of voice interaction during actual use, then the promotions are supported by reality. Conversely, if there is no significant improvement in user experience, or if problems such as lag and slow response appear, then the doubts carry more weight.

Why can automakers' products quickly integrate DeepSeek?

An engineer from Dongfeng Research and Development Institute previously explained during a live stream: "Based on our existing engineering pipeline for voice interaction in smart cockpits, we already possess the basic technical capabilities to combine the voice interaction pipeline with large models.

"Previously, we may have used other large models to power intelligent functions such as voice interaction in our smart cockpits. When DeepSeek emerged, it was precisely because we had this technical foundation and these engineering capabilities that we were able to introduce the DeepSeek large model quickly.

"This is the first level: integrating large models with our existing voice interaction pipeline. The second is fusing AI with our functional scenarios and unique features, which can maximize the capabilities of large models."

The engineer also stated that the application of DeepSeek in intelligent driving is still at the exploratory stage, as autonomous driving has extremely high requirements for real-time performance and safety. Going forward, the team will consider using DeepSeek's deep reasoning capabilities to assist autonomous decision-making in complex road environments and improve users' experience with autonomous driving.

"We believe that a core contribution of DeepSeek is that it reduces the cost of applying large models. Because it is open-source and has made its technical solutions public, the popularization of intelligent functions in automobiles will accelerate, reducing the cost for users and continuously speeding up the iteration of intelligent functions. In fact, we believe it will not increase the cost and price for users but will further allow them to enjoy more cost-effective intelligence."