After taking thousands of photos with the iPhone 16 Pro, I discovered the truth behind Apple's imaging shortcomings

09/23 2024

Algorithms are the Achilles' heel of Apple's imaging capabilities.

At some point, I found myself growing less interested in Apple's camera capabilities. On the one hand, Apple's advancements in imaging, especially photography, have been minimal in recent years. On the other hand, Android flagship phones have consistently surprised me with their imaging prowess. As a result, despite purchasing a new iPhone almost every year, I often find myself instinctively reaching for my Android phone whenever I need to take photos.

I'm not alone in feeling that Apple's iPhone cameras are mediocre. While Apple has attempted to address this with the inclusion of periscope zoom lenses and new camera algorithms in its recent models, they still pale in comparison to the imaging capabilities of Android flagships.

(Image source: Lei Technology)

You might argue that I'm biased. This year's iPhone 16 series introduced a dedicated camera button as a key selling point, and Lei Technology's dedicated review of it pointed out flaws in the design, calling it "innovation for innovation's sake" that may end up as another failed Apple experiment, like 3D Touch. Given those underwhelming interaction upgrades, how does the iPhone 16 series fare on actual imaging performance? What follows is Lei Technology's comprehensive review of the iPhone 16 Pro's imaging capabilities, based on extended hands-on use. Because our iPhone 16 Pro was not a media review unit, we had no reason to spare Apple's feelings, and this review is truly objective and unbiased.

Core specifications finally on par with competitor flagships

If we look solely at specifications, the iPhone 16 Pro's imaging configuration is not weak. With a 48MP main camera, a 48MP ultra-wide-angle lens, and a 5x periscope zoom lens, it holds its own against flagships like the Xiaomi 14 Ultra and Huawei Pura 70 Ultra.

(Image source: Lei Technology)

Compared with its predecessor, the iPhone 16 Pro seems to have improved the handoff between lenses when zooming. The iPhone 15 Pro cropped from the main camera between 2.1x and 2.9x, which compromised photo quality, resolution, and color in that range. The iPhone 16 Pro, with its upgraded 5x telephoto lens, performs better between 2.1x and 4.9x, most likely thanks to optimizations in Apple's camera algorithms.

According to Apple, the iPhone 16 Pro's night photography has improved significantly. Thanks to the new chip and AI-driven computational photography, all three lenses can retain more shadow detail, producing clearer, more vibrant photos in low light. With help from the LiDAR scanner, it can also take portrait-mode photos at night.

On paper, then, the iPhone 16 Pro's imaging finally seems to have come into its own. After two days of detailed testing, however, I reached a very different conclusion. The tech community's saying that "official sample photos are just for show" appears to hold true once again.

Significant improvements in the main camera; the sharpening issue is finally resolved

After not using the iPhone 15 Pro for photography in a while, my first reaction after capturing a set of photos with the iPhone 16 Pro was, "Is this really an iPhone? It's so good now!"

In well-lit indoor environments, the iPhone 16 Pro's main camera retains a wealth of shadow detail with smooth tonal transitions, without the blurriness and over-sharpening that plagued previous iPhones. Simply put, the images are clean and pleasing to the eye.

(Image source: Lei Technology)

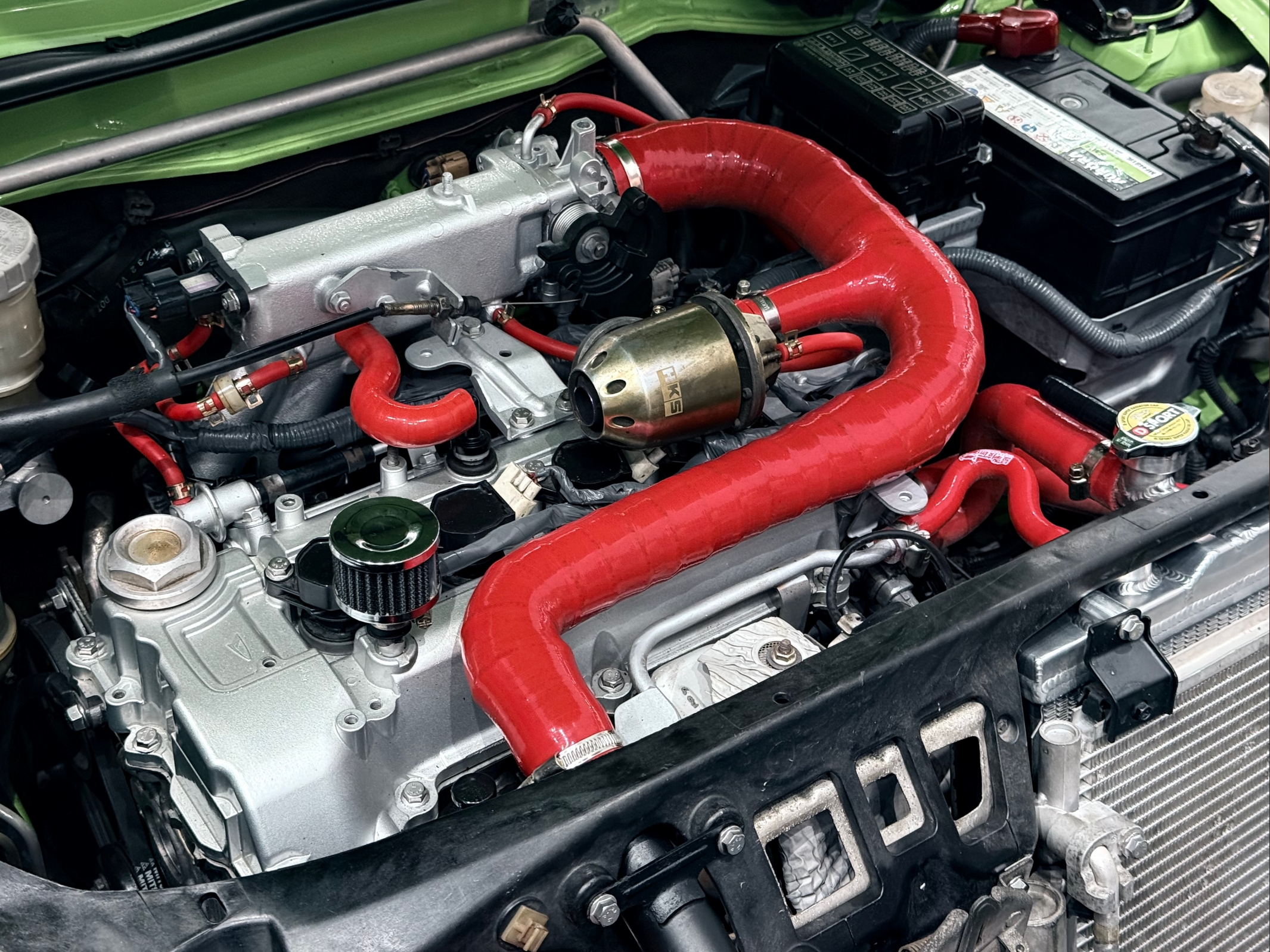

In scenes with complex or highly saturated colors, the iPhone 16 Pro's color reproduction is spot on. Whether it's glossy orange surfaces, reflective car paint, or matte finishes, it exceeds expectations.

(Image source: Lei Technology)

Many Apple users describe the iPhone's cameras as "realistic," but realism isn't always a virtue in photography. A bright red sports car shot on earlier iPhones, for example, would come out looking dull, whereas the iPhone 16 Pro captures the vibrant red and enhances it with light processing, making the car look even more striking.

(Image source: Lei Technology)

The weather in Guangdong has been less than ideal recently, with visibility significantly reduced. However, the iPhone 16 Pro's sample photos still showcase Apple's improvements: almost no sharpening artifacts on leaves and buildings, unlike the blurry or overly sharpened images from previous models. This is a welcome change.

(Image source: Lei Technology)

High-quality telephoto imaging, but significant limitations in zoom range

While I'm quite satisfied with the performance of the main camera, the telephoto lens has its pros and cons.

Let's start with the cons. The iPhone 16 Pro's telephoto lens has been upgraded from 3x to 5x optical zoom, which is technically an improvement, but it comes at the cost of the more practical medium telephoto focal length.

It's worth noting that most Android flagships in recent years have opted for telephoto lenses in the 3x to 3.7x range (roughly 72-90mm equivalent), a versatile focal length that suits a wide range of scenes. At 5x (120mm), the lens becomes far less versatile and is mainly useful in more specialized situations, such as shooting concerts or menu boards on a distant wall.
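The focal lengths quoted above follow from multiplying the zoom factor by the main camera's equivalent focal length. A minimal sketch, assuming a 24mm-equivalent main camera (the base that the 3x = 72mm and 5x = 120mm figures imply); the constant and function names are illustrative only:

```python
# Assumption: "1x" on the main camera corresponds to a 24mm full-frame-equivalent
# focal length, which is what the 3x -> 72mm and 5x -> 120mm figures imply.
BASE_FOCAL_MM = 24.0


def equivalent_focal_length(zoom: float, base_mm: float = BASE_FOCAL_MM) -> float:
    """Return the 35mm-equivalent focal length for a given zoom factor."""
    return zoom * base_mm


if __name__ == "__main__":
    # Typical Android mid-telephoto range (3x-3.7x) versus the iPhone 16 Pro's 5x lens.
    for zoom in (3.0, 3.7, 5.0):
        # prints: 3.0x -> 72mm, 3.7x -> 89mm, 5.0x -> 120mm
        print(f"{zoom}x -> {equivalent_focal_length(zoom):.0f}mm")
```

Strictly, 3.7x works out to about 89mm, which rounds to the roughly 90mm upper end of the range.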

(Image source: Lei Technology)

The iPhone 16 Pro falls into this trap, lacking a dedicated lens between 2.1x and 4.9x. Without such a lens, the phone relies on digital zoom by cropping the main camera's image, which compromises image quality. While I don't dispute the inclusion of a 5x telephoto lens, Apple should have also included a medium telephoto lens between 2x and 5x to avoid this limitation.
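Why does cropping cost so much quality? Zooming digitally by a factor z keeps only the central 1/z of the frame in each dimension, so the native pixel count falls by z². A rough sketch, assuming the 48MP main sensor is the crop source and ignoring any multi-frame fusion or upscaling Apple's pipeline may apply (the names are hypothetical):

```python
# Assumptions: a 48MP main sensor at 1x, and digital zoom z implemented as a
# plain center crop of 1/z per side, so only 1/z**2 of the pixels survive.
# Real camera pipelines may fuse frames or upscale; this model ignores that.
MAIN_SENSOR_MP = 48.0


def crop_megapixels(zoom: float, sensor_mp: float = MAIN_SENSOR_MP) -> float:
    """Native megapixels left after digitally cropping the 1x camera to `zoom`."""
    if zoom < 1.0:
        raise ValueError("crop zoom must be >= 1x")
    return sensor_mp / zoom**2


if __name__ == "__main__":
    # Zoom factors inside the 2.1x-4.9x gap that the main camera has to cover.
    for zoom in (2.0, 3.0, 4.9):
        # prints: 12.0 MP, 5.3 MP, 2.0 MP respectively
        print(f"{zoom}x crop -> {crop_megapixels(zoom):.1f} MP")
```

Under these assumptions, only about 2MP of real sensor data is left near 4.9x, which is the gap a dedicated medium telephoto lens would fill.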

Now for the pros. I must commend the iPhone 16 Pro's telephoto lens for its imaging quality. Even in poor lighting conditions like rainy days, it produces clean and noise-free images without excessive processing artifacts.

(Image source: Lei Technology)

In terms of imaging quality, I believe the iPhone 16 Pro's telephoto lens has finally caught up with Android flagships, though it hasn't yet surpassed them.

'Night like day': Apple's imaging shortcomings persist

Before testing, I never expected the iPhone 16 Pro to stumble so badly in night photography. The problem wasn't ghosting or missed focus; instead, the phone turned a dark Guangzhou sky at 9:30 PM into daylight. Without the lit buildings and the timestamp, most readers would assume the photo had been taken during the day.

(Image source: Lei Technology)

Upon closer inspection, you'll notice that the sky has been significantly brightened and color-corrected, turning the original yellow-black clouds into a bluish-gray, while the foreground buildings remain largely untouched, creating a disjointed effect.

If night mode is disabled and the default camera mode is used, the image quality and noise levels are even more disappointing.

(Image source: Lei Technology)

When I tried again in an even darker scene, the iPhone 16 Pro performed surprisingly well in night mode, bringing out nearly invisible details without overexposing the scene. Highlights from streetlights were still blown out, but overall the results matched my expectations for the iPhone's night mode.

(Image source: Lei Technology, top: night mode off, bottom: night mode on)

The iPhone 16 Pro's night photography performance is not entirely flawed, but it's inconsistent. The results can be described as "hit or miss."

In mobile photography, it's hard to tell devices apart in bright daylight or in portrait mode, where results come down largely to personal preference. Night photography and fast action shots, however, reveal a camera's true capabilities. Unfortunately, iPhone cameras have historically struggled in low light, often needing near-ideal conditions to produce decent night photos. With a new wave of Android imaging flagships approaching in 2024, Apple risks falling further behind if it doesn't update its camera algorithms soon.

Restoring images with algorithms: Apple following in Android's footsteps

Just as I thought my review of the iPhone 16 Pro's imaging was wrapping up, I came across an interesting sample photo. At first glance it looked flawless, but on closer inspection of the sticker in the top-right corner, I noticed that its printed text had been rendered unrecognizable.

(Image source: Lei Technology Productions)

This phenomenon was very common on early Android phones. To boost telephoto performance, many manufacturers used AI algorithms to guess at and reconstruct image details, but with intricate text they often got it badly wrong. After years of refinement, however, Android flagships have largely solved this problem.

Apple now seems to be following the same path that Android manufacturers took. To test this theory, Lei photographed the same set of objects with the iPhone 16 Pro and the vivo X100 Ultra. The difference was clear: the vivo showed a little pixel guessing, but the text remained legible, whereas the iPhone blurred a large clump of letters together, making the word impossible to read.

(Image source: Lei Technology Productions, top is vivo, bottom is iPhone)

This problem is far more serious than readers might think. As noted earlier, a 5x telephoto lens is mainly used for distant subjects such as menu boards or presentation slides. If it can't even reproduce basic text accurately, what is the point of having it?

One last, long-standing issue on image quality: Apple's website says the iPhone 16 Pro's lens uses a new coating that effectively improves anti-glare performance, but it makes no mention of ghosting. In our tests, the iPhone 16 Pro still showed very noticeable ghosting when facing bright light sources, which affects both composition and post-processing. Apple's ghosting problem does not look likely to be solved any time soon.

(Image source: Lei Technology Productions)

With poor image quality, will Apple rely on interaction to make a breakthrough?

Lei feels that with the iPhone 16, Apple seems to have accepted that its image quality cannot catch up with Android flagships, and its strategy is to make up for that shortfall with better interaction. For example, the entire lineup gets a dedicated camera control button, giving users a new way to interact when taking photos and videos. Android phones have, of course, already tried and abandoned this idea, but the iPhone 16 adds a "micro-innovation" through a different implementation. Lei predicts that competitors will soon follow suit with Android phones that have dedicated camera buttons.

Processing speed remains a major strength of the iPhone's imaging. Apple says the sensor's processing has been upgraded, and in Lei's simple tests, the iPhone 16 Pro was significantly faster than the iPhone 15 Pro for everyday snapshots, burst shooting, and back-to-back ProRAW photos.

The gap was largest with ProRAW photos, which demand more data processing: the iPhone 16 Pro could process a shot and take the next one while the iPhone 15 Pro was still showing a spinning indicator in the viewfinder. In the same amount of time, the iPhone 16 Pro took 16 photos, while the 15 Pro took only 11. From this perspective, the iPhone 16 Pro's improvement is genuinely significant, because quick snapshots and burst shooting are the most important aspects of mobile photography, letting us capture fleeting moments in a way traditional cameras cannot match.
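Taking the reported burst counts at face value (16 versus 11 shots in the same time window, from the single comparison described above), the relative ProRAW throughput works out as:

```latex
\[
\frac{16}{11} \approx 1.45
\quad\Longrightarrow\quad
\text{roughly } 45\% \text{ more ProRAW shots in the same time}
\]
```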

(Image source: Lei Technology Productions)

However, the iPhone 16 Pro's imaging interaction is not yet perfect. This year adds a new style and tone feature that lets users adjust a photo's tone directly in the camera app. Rather than simply applying a filter, it involves adjusting parameters manually, and the learning curve is steep: users who aren't photography enthusiasts may find the many parameters confusing. Hopefully Apple will provide simpler introductory tutorials in the future instead of leaving users to figure out a maze of style parameters on their own.

(Image source: Lei Technology Productions)

Conclusion: Algorithms are the Achilles' heel of Apple's imaging capabilities

As usual, here is a summary of the iPhone 16 Pro review in Lei Technology's standard format:

Advantages:

1. Over-sharpening and smearing largely resolved;

2. Excellent telephoto shooting performance on par with Android flagships;

3. Fast processing speed for snapshot and continuous shooting;

Disadvantages:

1. Poor night mode performance;

2. Severe algorithmic pixel guessing that garbles text;

3. Lack of a medium telephoto lens;

4. Ghosting issues still persist;

5. Room for improvement in interaction.

Special note: This iPhone 16 Pro imaging review focuses mainly on still images (photography), and does not cover video recording, which is Apple's strength.

After reviewing a series of sample images, Lei believes there is genuinely nothing wrong with the iPhone 16 Pro's hardware; its imaging shortcomings cannot be blamed on Apple skimping on components. Judging by its performance in bright light, in low light, and in fine details, Apple's algorithms clearly lag well behind Android's. The industry has long held the misconception that Apple's camera hardware is average but its algorithms are powerful. From now on, let's stop saying that Apple's imaging algorithms are powerful.

Lei has seen similar algorithm issues on earlier Android flagships, but those manufacturers found good solutions as their algorithms kept evolving. Why is Apple repeating the same mistakes? The root cause is that Apple placed little emphasis on camera algorithms early on, believing that "authenticity" mattered most.

In the era of computational photography, however, algorithms are essential. DJI has become the imaging industry's biggest dark horse in recent years largely thanks to its powerful stabilization and image transmission algorithms. Insta360, the leading Chinese action camera brand, has overtaken GoPro, also on the strength of its algorithms. The rapid progress of Android imaging flagships is likewise driven by algorithms. As computational photography becomes ever more prevalent, Apple has finally recognized its shortcomings and is working to make up for them.

But technology never sleeps, and imaging algorithms keep learning and evolving. Competitors will not stand still waiting for Apple to catch up. How can Apple's imaging algorithms close the gap? Lei recalls that when Apple was rolling out Apple Intelligence, it had to turn to OpenAI and other outside partners because its own large-model capabilities fell short. Who could Apple turn to for help with imaging algorithms? Please, not another camera co-branding deal in the vein of Leica, Hasselblad, and Zeiss; that would be too boring.

Source: Lei Technology