Has DeepSeek Halted the Construction of Multiple AI Computing Centers? Industry Insiders Reveal Seven Key Points

02/19 2025

Highly accessible and cost-effective large models have sparked an explosion in demand from ordinary enterprises and individuals. The next six months will be a critical period for validating applications.

Recent articles have claimed that "DeepSeek has halted the construction of multiple AI computing centers," but many industry insiders disagree. Since the Spring Festival, many enterprises, particularly listed companies, have been integrating DeepSeek, and the coming months will be a period of application validation.

Feedback from the industry suggests that this year's domestic AI computing market will exhibit several notable characteristics:

1. The number of large AI computing orders in the training market will decline, and decisions on large AI computing center projects will be postponed for six months to a year.

2. In the inference market, highly accessible and cost-effective large models have ignited a surge in demand from ordinary enterprises. Cloud giants' inference services are expanding rapidly, and enterprises will see a boom in private deployments of 1 to 20 servers.

3. Computing power demand is shifting from centralized to fragmented, yet the overall AI computing market is still anticipated to grow. The construction of AI computing centers will not cease.

4. Inference requires less computing power than training, which favors domestic computing power. Some previously idle domestic AI computing centers are expected to be put into use.

5. The urgency for AI computing centers to adopt liquid cooling technology has diminished.

6. NVIDIA's share price is "tied" to GPT-5, and demand for its products in the domestic market remains robust.

01

Large AI computing orders to be on hold for over six months

"Large AI computing orders will significantly decrease," a senior server industry expert told Digital Frontier.

In the training market, internet giants will not readily cede the large-model market to DeepSeek, but apart from the top few, most enterprises will focus on application development. There, what matters is how much secondary development is needed: if a model only needs fine-tuning, large-scale computing power is unnecessary.
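To see why fine-tuning needs far less computing power than training a model from scratch, here is a rough back-of-the-envelope sketch. It assumes the widely used approximation that training a dense transformer costs about 6 × N × D floating-point operations (N parameters, D training tokens), and it applies the same approximation to full-parameter fine-tuning. The model size, token counts, and per-GPU throughput are hypothetical round numbers, not figures from DeepSeek or any vendor.

```python
# Back-of-the-envelope training compute, using the common approximation
# FLOPs ~= 6 * N * D for a dense transformer (N = parameters, D = tokens).
# All sizes below are hypothetical round numbers chosen for illustration.

def training_flops(params: int, tokens: int) -> int:
    """Approximate total training FLOPs (forward + backward passes)."""
    return 6 * params * tokens

def gpu_hours(flops: int, effective_tflops: float) -> float:
    """Convert FLOPs to GPU-hours at an assumed sustained throughput."""
    return flops / (effective_tflops * 1e12) / 3600

PARAMS = 7_000_000_000               # hypothetical 7B-parameter model
PRETRAIN_TOKENS = 2_000_000_000_000  # hypothetical 2T pretraining tokens
FINETUNE_TOKENS = 100_000_000        # hypothetical 100M fine-tuning tokens
EFFECTIVE_TFLOPS = 400.0             # hypothetical sustained TFLOPS per GPU

pretrain = training_flops(PARAMS, PRETRAIN_TOKENS)
finetune = training_flops(PARAMS, FINETUNE_TOKENS)

if __name__ == "__main__":
    print(f"pretraining: {gpu_hours(pretrain, EFFECTIVE_TFLOPS):,.0f} GPU-hours")
    print(f"fine-tuning: {gpu_hours(finetune, EFFECTIVE_TFLOPS):,.1f} GPU-hours")
    print(f"ratio: {pretrain // finetune:,}x")
```

Under these assumptions, the pretraining run needs about 58,000 GPU-hours while the fine-tuning run needs about 3, a 20,000-fold difference driven entirely by the token counts.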

By reducing computing power consumption, DeepSeek has overturned earlier assumptions and opened a new path for the industry. "This is undoubtedly good news," an AI computing expert told Digital Frontier. "If the industry had really kept expanding computing power without limit until it consumed the nation's entire electricity supply, that would have been unreasonable. It should never have gone that way in the first place."

There is a consensus in the industry that the rush to build clusters of 100,000 or 500,000 GPUs, fueled by OpenAI, is now less pressing.

Furthermore, decisions on super-large AI computing center projects that had been pursued blindly or hesitantly, such as those planning investments of billions or tens of billions, will also be affected.

"For large AI computing projects, the next six months to a year should be a period of observation," said a senior AI computing expert. An enterprise that applies large models expects the coming months to be a period for validating DeepSeek applications.

However, large orders will not disappear. "DeepSeek is not the endgame. Multimodal large models, world models... the competition among models has only just begun," said an expert from a large-model enterprise. "Training these models still starts at 10,000 GPUs or more."

02

Inference market: Public cloud giants are eager

"From the perspective of the inference market, over the past year large-model deployment has not yet truly entered a stage of explosive growth in inference and applications across industries," said an AI computing expert. DeepSeek's low cost may bring that explosive growth in intelligent applications sooner.

The inference market is divided into public clouds and private clouds.

Public clouds are dominated by large enterprises, which, two years after ChatGPT's launch, are eager to cash in. When the open-source DeepSeek R1 model was released, US companies such as Microsoft and Amazon AWS, and even Chinese cloud companies, officially announced related services. They have long been waiting for the inference market to arrive.

Following DeepSeek R1's release, Microsoft CEO Satya Nadella even posted in the early hours: "Jevons paradox strikes again! As AI gets more efficient and accessible, we will see its use skyrocket, turning it into a commodity we just can't get enough of."

Image source: Z Potentials WeChat public account
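Nadella's point can be made concrete with a textbook constant-elasticity demand model; this model and its parameter values are illustrative assumptions, not something the article or Microsoft specifies. If efficiency η lowers the cost of each unit of AI service to c = p/η (p being the price of compute), demand for the service is S = A·c^(−ε), and the compute consumed is R = S/η, then R = A·p^(−ε)·η^(ε−1). Total compute use therefore rises as efficiency improves whenever the demand elasticity ε is greater than 1.

```python
# Jevons paradox under a constant-elasticity demand model (illustrative).
# efficiency: units of AI service produced per unit of compute
# elasticity: price elasticity of demand for AI service (hypothetical values)

def compute_consumed(efficiency: float, elasticity: float,
                     compute_price: float = 1.0, scale: float = 100.0) -> float:
    """Total compute used when demand responds to the cost of AI service."""
    service_cost = compute_price / efficiency               # cost per unit of service
    service_demand = scale * service_cost ** (-elasticity)  # constant-elasticity demand
    return service_demand / efficiency                      # compute needed to serve it

for elasticity in (0.5, 1.5):
    before = compute_consumed(efficiency=1.0, elasticity=elasticity)
    after = compute_consumed(efficiency=10.0, elasticity=elasticity)
    trend = "rises" if after > before else "falls"
    print(f"elasticity {elasticity}: compute {before:.1f} -> {after:.1f} ({trend})")
```

With a tenfold efficiency gain, compute use falls from 100.0 to about 31.6 when demand is inelastic (ε = 0.5) but rises to about 316.2 when demand is elastic (ε = 1.5), which is the scenario the "Jevons paradox" argument relies on.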

According to analyst Ben Thompson, the core economic reason Microsoft has gradually distanced itself from OpenAI is that Microsoft wants to provide inference services but is reluctant to pay for $100 billion data centers, since the resulting models can be distilled and replicated, becoming cheap before they can be commercialized.

Domestic public cloud providers and the telecom operators' cloud platforms have now integrated DeepSeek. Sources told Digital Frontier that a flood of users trying the service has left some cloud platforms struggling to keep up with demand.

Beyond the public cloud giants, the industry speculates that DeepSeek itself may offer similar services. MongoDB, for example, started out as an open-source database and eventually became a SaaS service. "Large enterprises will take these open-source models without paying you a penny. US public clouds have long made money from open-source software, and this story will be repeated in China," said a senior cloud computing expert.

03

Competing for the private deployment market

Apart from public clouds, another segment of the inference market is private clouds or private deployments. The industry has reached a consensus that this market is poised to explode.

After the Spring Festival, an AI computing expert visited clients. "All listed companies are integrating DeepSeek," he told Digital Frontier. Enterprises are deploying their own local systems and integrating them with their businesses, whether for AI digital humans, AI games, AI singers, AI programmers, or AI sales.

"It's like when everyone was scrambling to use mainframes, and suddenly IBM introduced the personal computer," said another AI computing expert. Mainframes had been so expensive that only a few countries could afford them; IBM's PC brought computing into ordinary households.

Enterprises will build "their own small AI computing centers," deploying 1 to 10 servers (under 100 GPUs) or 10 to 20 servers (around 100 GPUs). "This is also the direction all IT enterprises are pitching to clients this year," said a senior server expert. In the coming months, enterprises will validate their applications, each showcasing its own strengths.

"Why private deployments? For example, can a law firm upload client contracts to the public cloud? That would be a breach of confidentiality, so there will be many business opportunities for private deployments," said a senior cloud computing expert.

However, everyone is using the same open-source large models on largely homogeneous hardware. This year, technology service providers will therefore need to differentiate themselves, chiefly by improving inference speed and cost-effectiveness.
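The link between inference speed and cost-effectiveness comes down to simple arithmetic: a server costs roughly the same per hour whatever it serves, so higher throughput directly lowers the cost of each token. The sketch below uses a hypothetical hourly server cost and hypothetical throughput figures; real numbers depend on the hardware, model, batch size, and utilization.

```python
# Cost per million output tokens for a privately deployed inference server.
# The hourly cost and throughput values are hypothetical placeholders.

def cost_per_million_tokens(hourly_cost: float, tokens_per_second: float) -> float:
    """Hourly server cost divided by tokens produced per hour, scaled to 1M tokens."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost / tokens_per_hour * 1_000_000

HOURLY_COST_YUAN = 50.0  # hypothetical all-in cost of one server per hour

for throughput in (1_000, 2_000):  # hypothetical aggregate tokens per second
    cost = cost_per_million_tokens(HOURLY_COST_YUAN, throughput)
    print(f"{throughput} tokens/s -> {cost:.2f} yuan per million tokens")
```

Doubling throughput on the same hardware halves the cost per token (here from about 13.89 to 6.94 yuan per million tokens), which is why software-level inference optimization becomes a point of differentiation when the models and hardware are the same.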

Hardware and software integration will become crucial, and cooperation among chip companies, server makers, solution providers, and private cloud service providers will intensify.

In terms of business models, last year the industry predicted that large-model training would make computing power demand more concentrated. This year, however, companies are adjusting their strategies to serve more medium-sized and even small customers. As orders become smaller and more fragmented, channel partnerships also become crucial.

04

Industry cautiously optimistic about the AI computing market

If large AI computing orders decrease in 2025 and fragmented orders for private deployments increase, will the overall Chinese AI computing market rise or fall?

Many remain cautiously optimistic. "If small enterprises each buy a server for 200,000 to 300,000 yuan this year, and larger enterprises spend two to three million yuan, 10,000 enterprises would add up to 30 billion yuan. I actually think this number will grow year by year," said the aforementioned senior cloud computing expert.
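The expert's estimate can be checked with quick arithmetic. The quote does not say how the 10,000 enterprises split between small and larger buyers, so the sketch treats that mix as a hypothetical parameter. The 30 billion yuan figure corresponds to all 10,000 enterprises spending at the top of the larger range (3 million yuan each); other mixes give lower totals.

```python
# Rough market-size arithmetic for private AI deployments (illustrative).
# Spending ranges come from the quote; the small/large mix is hypothetical.

def market_size(enterprises: int, large_share: float,
                small_spend: float, large_spend: float) -> float:
    """Total spend in yuan for a given share of larger enterprises."""
    large = enterprises * large_share
    small = enterprises - large
    return small * small_spend + large * large_spend

ENTERPRISES = 10_000

# Upper bound: every enterprise spends at the top of the larger range.
upper = market_size(ENTERPRISES, 1.0, 300_000, 3_000_000)
# Hypothetical mix: half small, half large, each at the midpoint of its range.
mixed = market_size(ENTERPRISES, 0.5, 250_000, 2_500_000)

print(f"upper bound: {upper / 1e9:.2f} billion yuan")
print(f"50/50 mix:   {mixed / 1e9:.2f} billion yuan")
```

Under the hypothetical 50/50 mix the total is 13.75 billion yuan, so the quoted figure is best read as an optimistic ceiling for a single year, consistent with the expert's expectation that spending will keep growing.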

"DeepSeek is a Watt moment. After Watt improved the steam engine so that it delivered stable power output, steam engines spread into every industry," said the aforementioned server expert. "Large models are like steam engines: once improved, they can enter every industry."

He has noticed that shares of some vertical-application companies, such as medical firms, have begun to soar. DeepSeek has opened up new possibilities in the application market. "Before this, the only one making money was NVIDIA."

"Which adds up to more: one large order, or the sum of many small orders?" the senior cloud computing expert continued. "The significance of DeepSeek is that highly accessible and cost-effective large models, much like personal computers, will trigger an explosion in demand from ordinary enterprises."

"When intelligent applications become widespread, they will drive large-scale expansion and construction of computing infrastructure," said an AI computing expert. In other words, even if individual computing cards are cheap, total volume will still be substantial.

05

NVIDIA's share price is "tied" to GPT-5

In the wake of DeepSeek's R1 release, NVIDIA's share price plummeted 16.97% in a single day, wiping out roughly $590 billion in market value.

"Everything used to go NVIDIA's way, but now a small crack has appeared, and people are questioning its prospects," a senior chip expert told Digital Frontier. "NVIDIA's gross margin has now reached 90%, which is a bit too high. Its share price has long been due for a correction. It's neither reasonable nor sustainable for one company to earn all of the industry's money."

Notably, although major US enterprises such as Microsoft, Google, Meta, and xAI have not cut their capital expenditures (capex), all of them are developing their own chips.

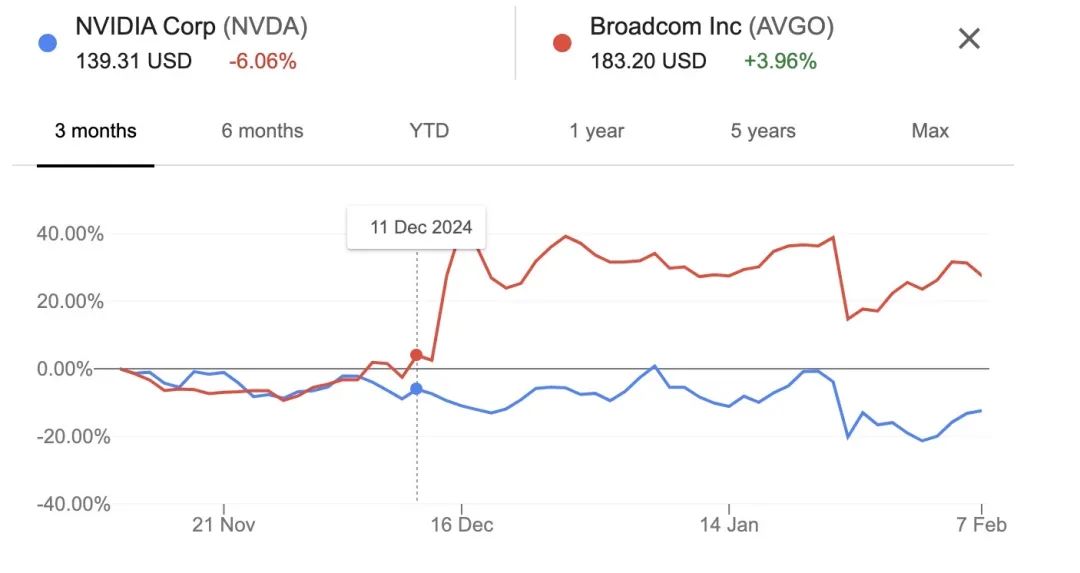

"Why did Broadcom's share price rebound faster than NVIDIA's? Because Broadcom makes ASICs (application-specific integrated circuits) for companies like Microsoft, Meta, and Google," said the aforementioned expert. ASICs offer significant advantages in performance, power consumption, and cost for the workloads they are built for, so these enterprises will depend less on NVIDIA in the future.

"The industry won't let anyone rest on their laurels for long. You can't keep winning by standing still," said the aforementioned AI computing expert. "NVIDIA hasn't really been idle these years, but it hasn't introduced anything as revolutionary as CUDA in more than a decade." CUDA significantly improved industry efficiency by providing rich development tools, libraries, and community support.

The expert believes that, judging from NVIDIA's moves in recent years, its cards are simply getting bigger and more densely integrated, essentially following the path of inertia. "In the CPU market, Intel used to dominate AMD, but now AMD's market value is three times Intel's. You can't keep winning by standing still in any field; at some point you have to make a real move."

NVIDIA has also brought DeepSeek into its CUDA ecosystem. "It's smart: if large models don't work, it'll do small ones; if demand falls, it'll focus on edge devices," the expert said. At this year's CES, Jensen Huang turned to promoting the consumer-grade RTX 5090 graphics card, a tier below NVIDIA's data-center products, to compete in the inference market.

"NVIDIA's stock price depends on how GPT-5 performs after its release," a cloud computing expert told Digital Frontier. In his view, if GPT-5 disappoints, offering little improvement over today's models, NVIDIA's share price will definitely crash; if GPT-5 is amazing and convinces people that breakthroughs are still possible, the share price will stabilize.

However, judging from the actions of Silicon Valley giants, with the explosion of the inference market, NVIDIA will face fierce competition in the future.

06

How can domestic computing power seize the opportunity?

The booming inference market presents an opportunity for domestic computing power.

Beyond high-end chips, NVIDIA's RTX 4090 and 5090 cards are also competing in this market, and prices have begun to rise in some markets. Domestic chips are accelerating their adaptation to DeepSeek to improve cost-effectiveness. Globally, the three US inference chipmakers Groq, Cerebras, and SambaNova all claim several times the performance of the H100; they support the DeepSeek-R1-Distill-Llama-70B model and have improved its inference speed.

How can domestic computing power seize this opportunity? The industry believes that the key lies in combining with software to improve inference efficiency.

On the other hand, "You see overseas enterprises and developers voting with their feet to use DeepSeek. In this case, is it possible for domestic computing power to go abroad along with large models?" said the aforementioned AI computing expert. "Don't just focus on the domestic market."

"In the previous wave of applications, frankly speaking, domestic computing power was not very cost-effective," the expert added. Now that algorithmic innovation has lowered the computing cost of models, domestic computing power should also come down in price. In his view, this is the application side forcing the computing side to adapt.

Large models will necessitate a reevaluation of chip design. DeepSeek's papers and related reports have already underscored the need for chip redesign. For instance, DeepSeek's technical architecture and optimization strategies offer fresh perspectives on the design of SoC chips, fostering advancements in edge computing chips and further catalyzing the development of customized AI chips.

"Technological iteration inherently oscillates between generalization and specialization..." remarked an AI computing expert. When generalized, technology thrives in an open environment, accelerating progress across the AI industry chain. When specialized, it prioritizes efficiency and cost in standardized scenarios.

07

Future Competition Will Intensify

The introduction of DeepSeek has ramped up competition within the industry.

"In the near term, DeepSeek will inspire a wave of innovative SMEs to embark on developing and deploying intelligent applications. In specific contexts, it may spawn popular intelligent applications, whose swift commercialization will spur significant demand in the inference market," analyzed an industry insider.

However, the industry concedes that US companies have overhyped AI as a premium, high-margin product. With DeepSeek offering extremely low costs, many people have rushed to try it. Still, AI adoption in business remains nascent, and across industries there is a shortage of talent versed in both the business and AI technology. If popular AI applications fail to materialize in the short run, engagement will likely remain superficial and experimental.

At the same time, can future versions of DeepSeek keep pace with the rapid evolution of overseas large models? While DeepSeek advances, its rivals are pressing ahead with their own research.

Commenting on DeepSeek recently, Elon Musk acknowledged its achievements but added, "Soon, you will witness companies like xAI unveiling models that surpass theirs." As these companies quickly combine DeepSeek's techniques with cutting-edge computing power, their own progress will accelerate too.

Future competition will undoubtedly intensify, yet DeepSeek's innovation is igniting and propelling the rapid growth of the domestic industry. One AI computing professional found that even an elementary school student can use DeepSeek to create a WeChat mini-program, and he himself has built a Tetris game with it. "If everyone can access and run it, solutions will follow. It's simply a matter of time."