Why Do Automakers' Functional Safety Concepts Lag Behind Rapid Advances in Intelligent Driving?

04/18 2025

Functional safety has retreated to a corner, neither impeding nor promoting the rapid progress of intelligent driving.

By Cao Lin

Edited by Mao Shiyang

Original content by Auto Pixel (ID: autopix)

01.

The Dilemma of Experience

After years of rapid technological and marketing advancements, L2 autonomous driving has finally encountered stringent regulatory scrutiny.

On the evening of April 16, the Equipment Industry Department I of the Ministry of Industry and Information Technology published a notice on the MIIT website, explicitly stating that "automobile manufacturers must clarify the system's functional boundaries and safety response measures, refrain from exaggerating and misrepresenting, strictly fulfill their obligation to inform, and effectively assume responsibility for production consistency, quality, and safety, thereby enhancing the safety level of intelligent and connected vehicle products."

In addition to the above notice, specific regulations (attached at the end of this article) have begun circulating within the industry. These regulations strictly limit L2 autonomous driving technology (hereinafter referred to as intelligent driving) in three aspects: promotion, usage norms, and supervision of over-the-air (OTA) updates by automakers.

The sudden announcement, coming just before the Shanghai Auto Show, disrupted the marketing strategies of many automakers. A marketing director at one automaker told us that many promotional materials had to be revised. Technology release schedules were affected as well, with some automakers having to revisit their planned OTA content. The frequency of OTA updates will decrease, and small-scale rollouts of "public beta" or "early access" versions will no longer be allowed.

What exactly leads to the uncertainty regarding the "system functional boundaries" and "safety response measures" of L2 autonomous driving technology?

The advancement of L2 autonomous driving has reached a point where a critical capability is "anthropomorphism," meaning driving the way a human would. It is not only a technical goal for intelligent driving developers but also a focus of car reviewers in their evaluations and comparisons. It covers subjective impressions such as whether acceleration and deceleration are smooth, whether emergencies are handled calmly and decisively, and whether following and overtaking other vehicles inspires confidence. These impressions often vary with the environment, making them difficult to capture clearly in a set of rules.

Anthropomorphism is the result of learning. Over the past two years, end-to-end large models have become the mainstream solution for intelligent driving: a vast number of cases are used to train the AI, which learns on its own how humans drive. As a result, progress in intelligent driving functions now comes from progress in large model capabilities rather than from developing functions out of endless handwritten rules.

By introducing AI that learns directly from humans, most automakers' large models can achieve anthropomorphic driving. The greater the authority granted to the large model by the entire intelligent driving system, the stronger the anthropomorphic ability of the intelligent driving system tends to be.

However, to date, no automaker has trained a perfect large model. A large model may encounter problems it cannot handle, and it may generate incorrect results without realizing it. To guard against incorrect results, most automakers place a set of rules (the Planner) on top of the AI as a "backup"; these rules screen out the dangerous options among those the large model proposes. For problems the model cannot handle, the intelligent driving system can make the decision that is correct for L2 autonomous driving: issue a warning and hand control back to the human driver.
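The arrangement described above can be sketched roughly as follows. This is a minimal illustration, not any automaker's actual implementation: the `Candidate` structure, the two thresholds, and the `choose_action` function are hypothetical names and values invented for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    """One driving option proposed by the learned model (hypothetical structure)."""
    name: str
    score: float            # model preference, higher is better
    min_gap_m: float        # predicted closest distance to any obstacle, in meters
    peak_decel_mps2: float  # strongest braking the option would require


# Illustrative rule thresholds (assumed values, not taken from any standard).
MIN_GAP_M = 2.0
MAX_DECEL_MPS2 = 6.0


def is_safe(c: Candidate) -> bool:
    """Rule-based check applied on top of the model's proposals."""
    return c.min_gap_m >= MIN_GAP_M and c.peak_decel_mps2 <= MAX_DECEL_MPS2


def choose_action(candidates: List[Candidate]) -> Optional[Candidate]:
    """Return the best-scoring option that passes the rules.

    Returns None when no option passes, which stands for
    "warn the driver and hand control back".
    """
    safe = [c for c in candidates if is_safe(c)]
    if not safe:
        return None
    return max(safe, key=lambda c: c.score)


proposals = [
    Candidate("overtake", score=0.9, min_gap_m=0.8, peak_decel_mps2=1.0),
    Candidate("follow", score=0.7, min_gap_m=12.0, peak_decel_mps2=2.0),
]
chosen = choose_action(proposals)
print(chosen.name if chosen else "warn driver and hand over")  # follow
```

Here the model's preferred option ("overtake") is rejected by the gap rule, so the lower-scored but rule-compliant option is used. If every option failed the rules, the sketch would fall through to the handover branch.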

However, the question of when and under what circumstances the system should exit and hand control back to humans remains a gray area, and this is where the issues with "system functional boundaries" and "safety response measures" arise. Should the intelligent driving system exit only when it has no confidence at all, when it is merely unsure, or whenever there is the slightest doubt?

The designers of intelligent driving systems tend to prefer a later exit, both to enhance the user experience and to improve a key metric: the number of takeovers per hundred kilometers. This is a figure that both intelligent driving engineering teams and automaker marketing teams watch closely. For example, XPeng's goal this year is to reduce the number of takeovers per hundred kilometers to one, as a demonstration of how advanced its technology is.
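To see why a later exit flatters this metric, consider a toy calculation. It assumes, purely for illustration, that the system logs a confidence value between 0 and 1 at each uncertain moment and exits when the value falls below a threshold; the logged values, the thresholds, and the function names are all hypothetical.

```python
from typing import Sequence


def takeovers_per_100km(takeovers: int, distance_km: float) -> float:
    """Normalize a takeover count to a per-100-km rate."""
    if distance_km <= 0:
        raise ValueError("distance_km must be positive")
    return takeovers * 100.0 / distance_km


def count_exits(confidences: Sequence[float], exit_below: float) -> int:
    """Count the moments at which the system would exit for a given threshold."""
    return sum(1 for c in confidences if c < exit_below)


# Hypothetical confidence values logged at uncertain moments over a 200 km drive.
logged = [0.95, 0.62, 0.40, 0.88, 0.15, 0.71]
distance_km = 200.0

cautious = count_exits(logged, exit_below=0.75)  # exits on slight doubt
late = count_exits(logged, exit_below=0.20)      # exits only when nearly lost

print(takeovers_per_100km(cautious, distance_km))  # 2.0
print(takeovers_per_100km(late, distance_km))      # 0.5
```

The same drive scores four times better on the metric under the late-exit threshold, even though nothing about the underlying driving capability has changed.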

In a recent high-profile highway collision accident, the timeline released by the relevant automaker showed that the intelligent driving system suddenly exited and handed control back to humans 2 to 4 seconds before the accident, with the vehicle traveling at a speed of 116 km/h.
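For a sense of scale, the distance covered in that window can be worked out directly. The sketch below assumes constant speed over the interval, which is a simplification; the function name is made up for the example.

```python
def distance_m(speed_kmh: float, seconds: float) -> float:
    """Distance traveled at constant speed, converting km/h to m/s."""
    return speed_kmh / 3.6 * seconds


for t in (2.0, 4.0):
    print(f"{t:.0f} s at 116 km/h: {distance_m(116.0, t):.1f} m")
# 2 s at 116 km/h: 64.4 m
# 4 s at 116 km/h: 128.9 m
```

Under that assumption, the driver had roughly 64 to 129 meters of road in which to notice the handover, assess the situation, and act.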

02.

Functional Safety Challenges

This way of making decisions is not unique to one system. When a rule-based algorithm serves as the last line of defense in an end-to-end model solution, the intelligent driving system will choose to exit in many situations it cannot handle. Exits occur more commonly when traffic rules are violated, such as running a red light or crossing the stop line on red. In such scenarios, the intelligent driving system crosses a boundary that is clearly described in the rule-based algorithm, and it often chooses to exit.

However, besides clear-cut errors like running a red light, under the goal of anthropomorphism, many rules may not be as clear. For example, on a lakeside road with a speed limit of 20 km/h but no other vehicles, should the intelligent driving system exceed the speed limit? Or on a highway ramp where the speed limit suddenly drops from 120 to 40 km/h, should the intelligent driving system slam on the brakes before the sign to reduce speed below the required value, or should it operate anthropomorphically by slowing down gradually? These are issues that require trade-offs and standardization.
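The ramp example can be made concrete with the constant-deceleration relation a = (v0² − v1²) / (2d). The two braking distances below are assumptions chosen for illustration, not figures from any regulation or from the article's sources.

```python
def required_decel_mps2(v_start_kmh: float, v_end_kmh: float, distance_m: float) -> float:
    """Constant deceleration needed to go from v_start to v_end within distance_m."""
    v0 = v_start_kmh / 3.6  # km/h to m/s
    v1 = v_end_kmh / 3.6
    return (v0 ** 2 - v1 ** 2) / (2.0 * distance_m)


# Assumed distances: a late reaction (50 m) versus an early, gradual slowdown (200 m).
for d in (50.0, 200.0):
    a = required_decel_mps2(120.0, 40.0, d)
    print(f"120 -> 40 km/h over {d:.0f} m needs {a:.2f} m/s^2")
# 120 -> 40 km/h over 50 m needs 9.88 m/s^2
# 120 -> 40 km/h over 200 m needs 2.47 m/s^2
```

Braking over 50 m would require about 1 g, which is emergency braking, while spreading the same speed change over 200 m requires a quarter of that. Whether the system must already be at 40 km/h when it reaches the sign, or may still be slowing as it passes, is exactly the kind of trade-off that needs to be standardized.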

Currently, the body of standards that domestic automobiles must follow is gradually expanding, with examples such as GB 17675 on the basic requirements for vehicle steering and GB 21670 on the technical requirements for passenger car braking. These standards regulate performance, safety redundancy, warning signals, and so on in their respective fields. In addition to mandatory standards, there is also a series of recommended standards.

Although intelligent driving decisions primarily involve steering, braking, and acceleration, relying on the functional safety definitions in the above standards to supervise the safety of L2 autonomous driving is currently not feasible, especially now that end-to-end large models, which cannot be defined function by function, have become the technical solution for intelligent driving.

End-to-end large models have one end for the input of sensory information, collected by all cameras and radars on the vehicle related to driving, and the other end for the output of driving decisions, with no other intermediate links.

The eliminated links include perception, prediction, planning, and control, which were the four basic modules of the previous generation of intelligent driving technology. At that time, intelligent driving developers needed to organize driving rules into code and write them line by line into the four basic modules. However, the industry eventually discovered that the situations encountered on the road are endless. The more engineers responsible for sorting out corner cases (special cases) a company has, the more problems they can identify and patches they can apply. The introduction of end-to-end large models has solved this problem.
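The structural difference between the two generations can be sketched as follows. This is a toy illustration only: the data types, the single hand-written braking rule, and all function names are hypothetical stand-ins, and real systems are far more complex.

```python
from typing import Callable, Dict, List

SensorFrame = Dict[str, float]  # stand-in for camera and radar data
Command = Dict[str, float]      # stand-in for steering, brake, throttle


def perception(frame: SensorFrame) -> List[float]:
    """Toy perception: report the distance to the lead vehicle."""
    return [frame["lead_distance_m"]]


def prediction(objects: List[float]) -> List[float]:
    """Toy prediction: assume each gap shrinks by 5 m over the horizon."""
    return [d - 5.0 for d in objects]


def planning(future_gaps: List[float]) -> str:
    """Toy hand-written rule: brake if any predicted gap is under 20 m."""
    return "brake" if min(future_gaps) < 20.0 else "cruise"


def control(plan: str) -> Command:
    """Toy control: turn the plan into an actuator command."""
    return {"brake": 0.5 if plan == "brake" else 0.0}


def modular_stack(frame: SensorFrame) -> Command:
    # Each intermediate value can be logged and checked against a requirement.
    return control(planning(prediction(perception(frame))))


def end_to_end_stack(frame: SensorFrame,
                     model: Callable[[SensorFrame], Command]) -> Command:
    # A single learned mapping: there is no intermediate value to inspect.
    return model(frame)


print(modular_stack({"lead_distance_m": 22.0}))  # {'brake': 0.5}
```

In the modular version, every new corner case means another hand-written rule in one of the four functions. In the end-to-end version, behavior comes from training data instead, but the interfaces between stages are no longer there to be examined.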

However, for functional safety engineers, it is difficult to fully implement the concept of functional safety within an end-to-end framework.

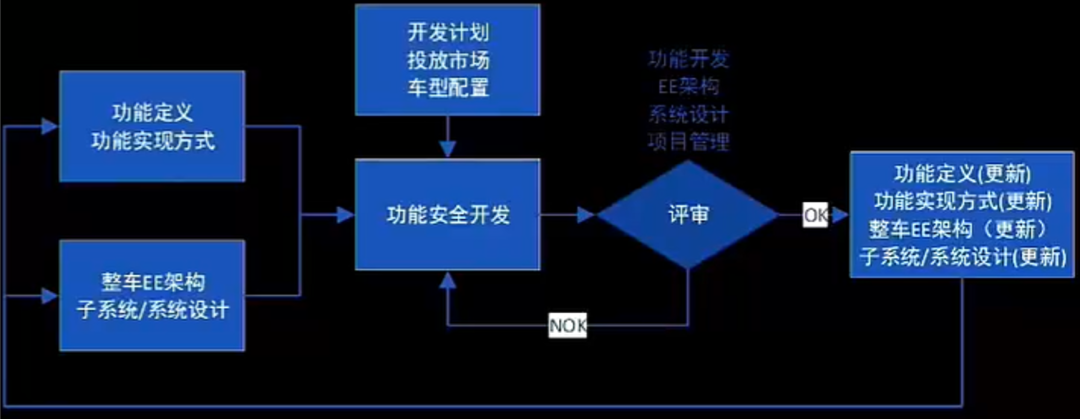

▍Functional Safety Concept of an Automaker

For example, at one automaker that currently excels in functional safety in China, the functional safety team gets involved from the early stages of a new vehicle's architecture design and function definition. Functional safety work then proceeds in step with the vehicle model's development. When functions change, the functional safety department must give its own opinion, judge which changes will affect downstream results, and adapt the functional safety requirements accordingly. These requirements sometimes specify signals, interaction methods, and sequences. Finally, at the review stage after product development is complete, functional safety engineers identify and inspect the safety-related items in each subsystem.

However, the end-to-end intelligent driving large model is a "black box" that is difficult to inspect. No matter how thoroughly an automaker implements its functional safety concept, it is difficult to intervene in the first two stages and propose specific requirements throughout the entire process.

Additionally, current regulations regarding intelligent driving technology are relatively vague, making it difficult for automakers' functional safety departments to find standards for implementation. On August 1, 2022, the MIIT, in conjunction with the Quality Development Bureau of the State Administration for Market Regulation, issued the "Notice on Further Strengthening the Administration of Access, Recall, and Over-the-Air (OTA) Software Updates for Intelligent and Connected Vehicles (Draft for Comment)", which to some extent regulates automakers' OTA updates. Some automakers use OTA updates to address unreported product issues, and these loopholes will be closed as relevant regulations are improved.

However, these regulations mostly focus on outcomes and impacts, and the direction of standards that truly target the technology itself is still vague.

In contrast, the EU's General Product Safety Regulation takes another approach. Although it does not lay down detailed technical standards, once a product is involved in a safety accident, the enterprise must provide sufficient evidence that it did everything it could in order to be relieved of liability; otherwise, it faces heavy fines. This forces enterprises to conduct "self-audits" throughout research, development, and production, and to hold themselves to the highest standards they can.

Besides having a higher capability ceiling, the end-to-end large model also brings a benefit: it eliminates the process of information transfer between the four modules, avoiding the attenuation of sensor-collected information during transmission between modules.

This makes it feasible to cut back on expensive sensors, for example by reducing or removing lidar, and to deploy intelligent driving technology on cheaper vehicle models. As intelligent driving technology becomes more prevalent, regulating the safety boundaries of the technology is indeed imperative.

Attachment: Online Content on Intelligent Driving Supervision

I. Regulating Automaker OTA

(1) Tighten the review of automaker OTA updates, requiring automakers to reduce the frequency of OTA updates and ensure thorough verification before each OTA update is deployed. In case of an emergency requiring an OTA update, automakers need to follow the recall and production suspension process, and new OTA requirements must be approved by the State Administration for Market Regulation before deployment;

(2) Disallow "public beta" testing under any name. Regardless of the number of users involved, the announcement process must be the same as for full versions.

II. Regulating Technology Promotion

(1) Prohibit the use of terms such as "autonomous driving," "self-driving," "intelligent driving," "advanced intelligent driving" in promotions, and instead use "intelligent driving level + assisted driving" for description (e.g., L2 assisted driving);

(2) Prohibit the use of terms such as "valet parking," "one-click summon," "remote control";

(3) Disallow the use of "takeover" in L2-level promotions and descriptions of "hands-off" or "eyes-off";

(4) Use full Chinese names whenever possible. Even if English is used, the full Chinese name must be provided the first time.

III. Regulating the Promotion of Capabilities Related to Usage Methods

(1) Urge manufacturers to technically prohibit drivers from disengaging and require face ID recognition for the use of driving assistance. Adjusting the seat or reclining it is prohibited while driving assistance is active;

(2) Manufacturers cannot use "driver visual disengagement" to suppress or disable "driver physical disengagement." For "physical disengagement" lasting 60 seconds, users need to provide a reasonable explanation;

(3) No longer accept functions that cannot ensure the driver's full control, such as "valet parking," "one-click summon," "remote control";

(4) Even if the vehicle's intelligent driving capabilities avoid accidents when the driver cannot respond, users still need to be penalized;

(5) L2-level assisted driving capabilities such as LCC and NOA need to undergo "collision avoidance tests," with detailed and comprehensive information in the test reports;

(6) For automakers using digital simulations to model the application scenarios of assisted driving, they need to provide an evaluation of the feasibility of their entire simulation system.
